VR strikes back at MPEG

VRTogether project partners Motion Spell, TNO and CWI participated in the last two MPEG meetings (#122 in San Diego and #123 in Ljubljana) to gather up-to-date feedback on Virtual Reality. VR activities are flourishing in many areas: MPEG-I, OMAF, Point Clouds, NBMP and MPEG-MORE. The long-term trend suggests that VR is coming back on the scene and will soon reach the market.

This article focuses on Point Clouds and MPEG-MORE. The first part of this article covers all the other technologies.

Point Cloud Compression

MPEG-I targets future immersive applications. Part 5 of this standard specifies Point Cloud Compression (PCC).

A point cloud is defined as a set of points in 3D space. Each point is identified by its Cartesian coordinates (x, y, z), referred to as spatial attributes, and can carry other attributes such as a color, a normal or a reflectance value. There are no restrictions on the attributes associated with each point.
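As an illustration of this definition, here is a minimal sketch of a point cloud as a plain data structure. The `Point` class, the attribute names and the `bounding_box` helper are assumptions made for this example; they do not come from any MPEG specification.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Point:
    """One point: Cartesian position plus an open-ended set of attributes."""
    x: float
    y: float
    z: float
    # Attribute names such as "color" or "normal" are illustrative only;
    # any key/value pair can be attached to a point.
    attributes: Dict[str, Any] = field(default_factory=dict)


def bounding_box(cloud: List[Point]) -> Tuple[Vec3, Vec3]:
    """Return the (min corner, max corner) of the axis-aligned bounding box."""
    xs = [p.x for p in cloud]
    ys = [p.y for p in cloud]
    zs = [p.z for p in cloud]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Three points, each carrying a different set of attributes.
cloud = [
    Point(0.0, 0.0, 0.0, {"color": (255, 0, 0)}),
    Point(1.0, 2.0, 0.5, {"color": (0, 255, 0), "normal": (0.0, 0.0, 1.0)}),
    Point(-1.0, 0.5, 3.0, {"reflectance": 0.8}),
]
print(bounding_box(cloud))  # ((-1.0, 0.0, 0.0), (1.0, 2.0, 3.0))
```

Keeping the attributes in a free-form dictionary mirrors the fact that nothing restricts which attributes a point may carry.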

Point clouds allow representing volumetric signals. Because of their simplicity and versatility, they are important for emerging AR and VR applications. Point clouds are usually captured using multiple RGB plus depth sensors. A point cloud can contain millions of points in order to create a photorealistic reconstruction of an object. Compression of point clouds is essential to efficiently store and transmit volumetric data for applications such as tele-immersive video and free-viewpoint sports replays, as well as for innovative medical and robotic applications.

MPEG has a separate activity on point cloud compression: in April 2017 MPEG issued a Call for Proposals (CfP) on PCC technologies, seeking compression proposals in three categories:

- Static frames

- Dynamic sequences

- Dynamically acquired/fused point clouds

Leading technology companies responded to the CfP, and the proposals were assessed in October 2017. In addition to objective metrics, each proposal was also evaluated through subjective tests, performed at GBTech and CWI. The winning projects were selected as “Test Models” for the next step of the standardization activity.
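To give an idea of what an objective metric for point clouds can look like, here is a sketch of a generic point-to-point geometry error between an original and a decoded cloud. It is not necessarily the metric used in the CfP evaluation. The function names and the choice of `peak` value are assumptions for illustration.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def nearest_sq_dist(p: Vec3, cloud: List[Vec3]) -> float:
    """Squared distance from p to its nearest neighbour in cloud (brute force)."""
    return min(
        (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2
        for q in cloud
    )


def one_way_mse(src: List[Vec3], dst: List[Vec3]) -> float:
    """Mean squared nearest-neighbour distance from every point of src to dst."""
    return sum(nearest_sq_dist(p, dst) for p in src) / len(src)


def symmetric_mse(a: List[Vec3], b: List[Vec3]) -> float:
    """Take the worse of the two directions so that missing points are penalised."""
    return max(one_way_mse(a, b), one_way_mse(b, a))


def geometry_psnr(a: List[Vec3], b: List[Vec3], peak: float) -> float:
    """PSNR in dB; peak is an assumed signal range, e.g. the bounding-box size."""
    mse = symmetric_mse(a, b)
    if mse == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / mse)


original = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
decoded = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]  # one point displaced
print(symmetric_mse(original, decoded))
print(geometry_psnr(original, decoded, peak=1.0))
```

The brute-force nearest-neighbour search is quadratic in the number of points; a cloud with millions of points would need a spatial index instead.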

For the compression of dynamic sequences, it was found that compression performance can be significantly improved by leveraging existing video codecs after performing a 3D to 2D conversion using a suitable mapping scheme. This also allows the use of hardware acceleration of existing video codecs, which is supported by many current generation GPUs. Thus, synergies with existing hardware and software infrastructure can allow rapid deployment of new immersive experiences.
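The 3D to 2D conversion can be sketched with a deliberately simple mapping: an orthographic projection along the z axis that keeps the nearest point for each pixel. This is an assumption-laden illustration and not the mapping scheme of the Test Models. The function name and the use of integer voxel coordinates are hypothetical.

```python
from typing import List, Optional, Tuple

Color = Tuple[int, int, int]
PointXYZRGB = Tuple[int, int, int, Color]  # integer voxel coordinates plus a color
DepthMap = List[List[Optional[int]]]
TextureMap = List[List[Optional[Color]]]


def project_to_maps(
    points: List[PointXYZRGB], width: int, height: int
) -> Tuple[DepthMap, TextureMap]:
    """Project points along z onto a width x height grid.

    Returns a depth map and a texture map; pixels that no point falls on
    stay None. When several points land on the same pixel, only the one
    with the smallest z (closest to the projection plane) is kept.
    """
    depth: DepthMap = [[None] * width for _ in range(height)]
    texture: TextureMap = [[None] * width for _ in range(height)]
    for x, y, z, color in points:
        if not (0 <= x < width and 0 <= y < height):
            continue  # point falls outside the image
        current = depth[y][x]
        if current is None or z < current:
            depth[y][x] = z
            texture[y][x] = color
    return depth, texture


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
points = [(0, 0, 5, RED), (0, 0, 2, GREEN), (1, 1, 7, BLUE)]
depth, texture = project_to_maps(points, width=2, height=2)
print(depth)    # [[2, None], [None, 7]]
print(texture)  # [[(0, 255, 0), None], [None, (0, 0, 255)]]
```

The resulting depth and texture maps are ordinary 2D images, which is what makes them suitable inputs for an existing video codec. Note that the red point is occluded and lost in this single projection, so a practical mapping scheme has to handle occlusions, for example by using more than one projection.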

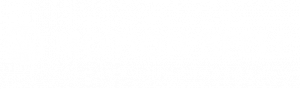

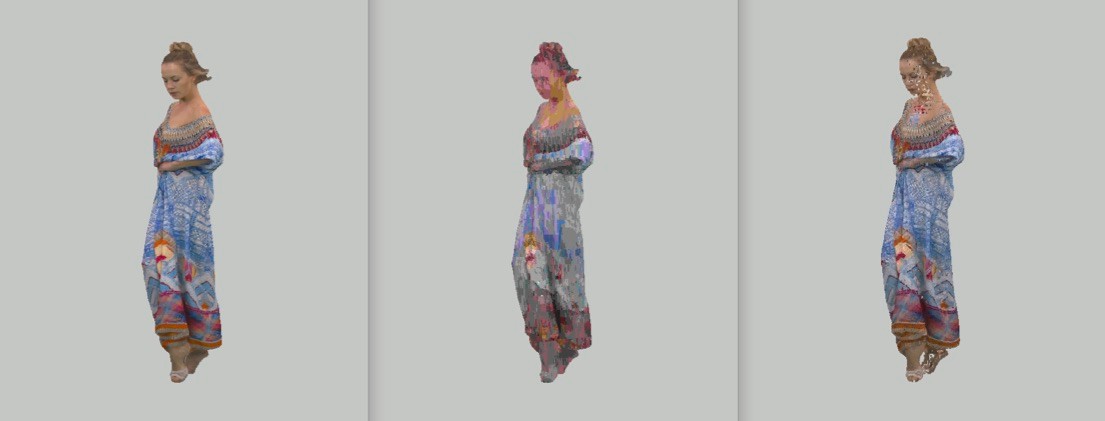

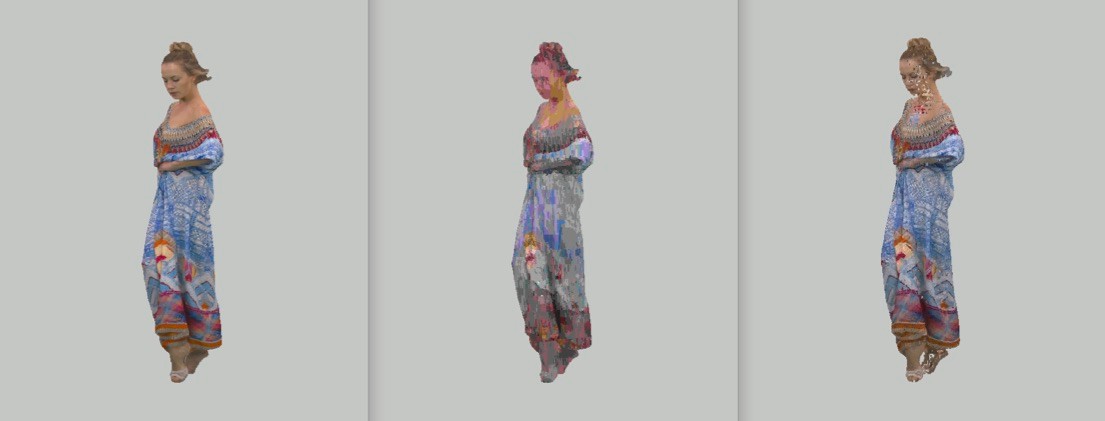

Figure 1: An example of a perspective view of a point cloud: the original version on the left, and two views of compressed versions of the same point cloud in the middle and on the right.

After the selection of the Test Models that combine the best performing technologies, the activity focused on the identification and investigation of methods to optimize the Test Models, by performing “Core Experiments”. Examples of Core Experiments include the comparison of different schemes for mapping the texture information from 3D to 2D, the analysis of hybrid codecs that combine 3D geometry compression techniques with traditional block-based video compression strategies, and the use of motion field coding. These Core Experiments are still ongoing.

At the last MPEG meetings, the PCC activity was particularly well attended, attracting attention from many industrial partners. The main activities of the group focused on cross-checking the Test Models and reviewing the results of the Core Experiments. In addition, some new datasets created by commercial companies (8i, Owlii, Samsung, Fraunhofer) were presented, along with a proposal to merge two of the Test Models in order to take advantage of 2D compression techniques (HEVC and its successors). A preliminary contribution also explored the delivery and transmission of point clouds based on this approach.

In the VRTogether project, CWI is providing a solution for lossy compression of dynamic point clouds, based on the open source Point Cloud Compression software developed at CWI (available at https://github.com/cwi-dis/cwi-pcl-codec). This solution will not compete in the standardization race, but it serves as an open source tool to benchmark different solutions and to experiment with research ideas. It is currently being integrated into the VRTogether DASH-based point cloud communication pipeline, which will allow multiple users to see each other's point cloud representations, captured in real time and rendered in the same virtual environment. Part of CWI's research within the VRTogether project will also focus on the design of new objective quality metrics for evaluating point clouds, based on the study of human perception of volumetric signals.

MPEG-MORE

MPEG's Media Orchestration standard (also known as MORE, MPEG-B Part 13: https://mpeg.chiariglione.org/standards/mpeg-b/media-orchestration) has been finalized by the committee, and the final edited version has been submitted to MPEG's parent body and ISO for one more yes/no ballot followed by publication. This last step is a mere formality. It is hard to estimate when ISO will publish the specification, since publication requires some secretariat work that can take quite a few months. The work on Reference Content and Software continues. Its aim is to make content available with MORE metadata, so that (potential) users of the MORE specification can understand how the specification works and get help in creating implementations.

In the meantime, Social VR has become more important in MPEG, and it looks like some of the requirements can be fulfilled by the MORE specification. This notably applies to the simpler forms of Social VR, where images of users are composited into a VR360 experience. This requires both temporal synchronization (multiple users should experience the same scene simultaneously) and spatial coordination (the composited images for all users need to have consistent location and size for the experience to be perceived as realistic and compelling). MORE defines the necessary metadata and protocols for this.
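The two requirements can be illustrated with a small sketch. It does not use the actual MORE metadata or protocols: the `Placement` record, the clock-offset convention and the scaling rule are all hypothetical and only show the kind of information that has to be shared between users.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Placement:
    """Hypothetical description of where one user's image sits in the 360 scene."""
    user_id: str
    yaw_degrees: float  # horizontal direction in the shared scene
    distance_m: float   # distance from the viewer, in metres


def local_presentation_time(shared_time_s: float, clock_offset_s: float) -> float:
    """Temporal synchronization: map a time on the shared timeline to a local clock.

    clock_offset_s is assumed to be (local clock - shared clock), in seconds.
    """
    return shared_time_s + clock_offset_s


def apparent_scale(placement: Placement, reference_distance_m: float = 1.0) -> float:
    """Spatial coordination: draw a user placed farther away proportionally smaller."""
    return reference_distance_m / placement.distance_m


# Every client presents the same scene instant at the matching local time.
clock_offsets: Dict[str, float] = {"alice": 0.25, "bob": -0.5}
for user, offset in clock_offsets.items():
    print(user, local_presentation_time(10.0, offset))

# Every client composites the same user at the same place and size.
bob = Placement(user_id="bob", yaw_degrees=30.0, distance_m=2.0)
print(apparent_scale(bob))  # 0.5
```

Because every client derives timing and size from the same shared values, all users see the same scene instant and the same layout of composited images.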

About MPEG

MPEG is the Moving Picture Experts Group, a joint ISO and IEC working group that created some of the foundations of the multimedia industry: the MPEG-2 Transport Stream, the MP4 file format, and a series of successful codecs in both video (MPEG-2 Video, AVC/H.264) and audio (MP3, AAC). A new generation (MPEG-H) emerged in 2013 with MPEG 3D Audio, HEVC and MMT, followed by other activities such as Point Cloud Compression in MPEG-I and Media Orchestration in MPEG-B.

Who we are

Motion Spell is an SME specialized in audio-visual media technologies. Motion Spell was created in 2013 in Paris, France. On a conceptual and technical level, Motion Spell will focus on the development of open transmission tools. Furthermore, Motion Spell plans to explore encoding requirements for VR in order to participate in the current standardization efforts and first implementations. Finally, we will also assist on the playback side to ensure that the end-to-end workflow is covered.

Come and follow us on this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca and Entropy Studio.