The comeback of VR at MPEG

The general feeling in the MPEG community is that Virtual Reality (VR) made a false start. The $2bn acquisition of Oculus, maker of the Rift, in 2014 triggered a premature funding bubble that burst in early 2017. However, the long-term trend shows that VR is coming back on the scene and will soon catch up in the market. As a VRTogether project partner, Motion Spell has great expectations for VR. Our participation in the last MPEG meeting (#122) in San Diego confirmed that VR-related activities are blossoming in every area: MPEG-I, OMAF, point clouds, NBMP, MPEG-MORE.

MPEG

MPEG is the Moving Picture Experts Group, a working group of ISO and IEC that created some of the foundations of the multimedia industry: MPEG-2 TS and the MP4 file format, and a series of successful codecs in both video (MPEG-2 Video, AVC/H.264) and audio (MP3, AAC). A new generation (MPEG-H) emerged in 2013 with MPEG 3D Audio, HEVC and MMT, followed by other activities such as MPEG-I (more below).

The GPAC team and its commercial arm (GPAC Licensing), which is led by Motion Spell, are active contributors at MPEG.

MPEG meetings are organized as a set of thematic meeting rooms that represent different working groups. Each working group progresses from requirements to a working draft and then to an international standard. Each MPEG meeting gathers around 500 participants from all over the world.

MPEG-I: Coded Representation of Immersive Media

MPEG has been working on MPEG-I since mid-2017. MPEG-I targets future immersive applications. The goal of this new standard is to enable various forms of audio-visual immersion, including panoramic video with 2D and 3D audio, with various degrees of true 3D visual perception (leaning toward 6 degrees of freedom). The standard is already mature enough that we have to take it into account in VRTogether.

MPEG-I is a set of standards defining the future of media, which currently comprises eight parts:

• Part 1: Requirements – Technical Report on Immersive Media

• Part 2: OMAF – Omnidirectional Media Format

• Part 3: Versatile Video Coding

• Part 4: Immersive Audio Coding

• Part 5: Point Cloud Compression

• Part 6: Immersive Media Metrics

• Part 7: Immersive Media Metadata

• Part 8: NBMP – Network-Based Media Processing

In this article, we will focus on parts 1, 2, 3 and 8.

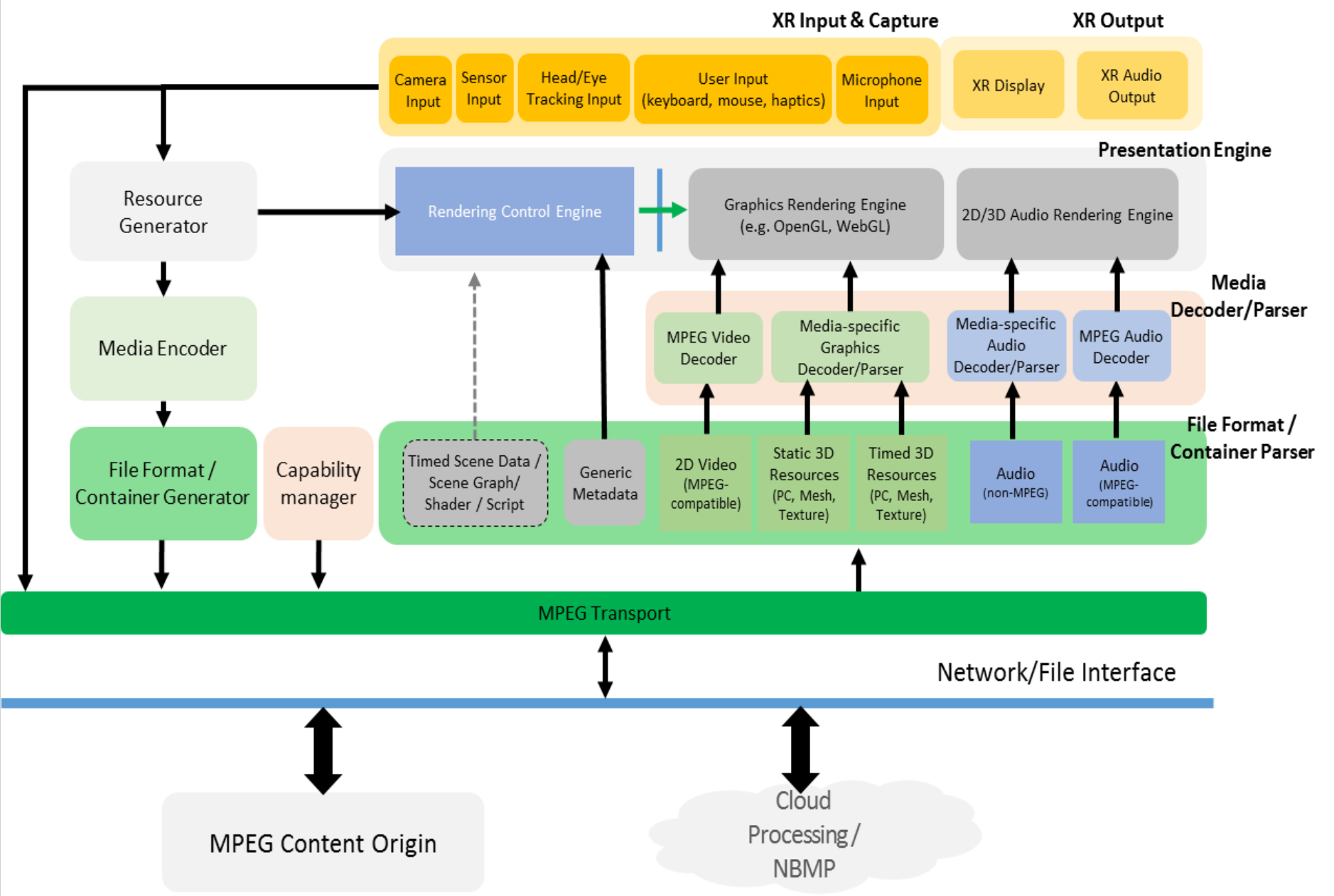

Architecture

MPEG-I defines a set of architectures rather than a single one.

Part 1: Requirements

MPEG-I requirements are divided into phases:

- Phase 1a: Captured-image-based multi-view encoding/decoding (standardization currently being finalized)

- Phase 1b: Video-based multi-view encoding/decoding. Mostly 3DoF and some 3DoF+. VR and interactivity activities are likely to be split.

- Phase 2: Video + additional data (depth, point cloud) based multi-view encoding/decoding. This phase addresses 6DoF with limited freedom (Omnidirectional 6DoF, Windowed 6DoF) and the synthesis of viewpoints from fixed cameras.
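The phases above are easier to compare with a concrete picture of the degrees of freedom involved. The following Python sketch is only an illustration under our own assumptions: the `Pose` class, the `constrain` helper and the single `max_offset` limit are hypothetical names and simplifications, not anything defined by MPEG-I. It models 3DoF as rotation only (yaw, pitch, roll), full 6DoF as rotation plus free translation, and the intermediate cases as translation limited to a small volume around the capture position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Viewer pose: three rotations (degrees) and three translations (meters)."""
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def constrain(pose: Pose, max_offset: float) -> Pose:
    """Limit translation to +/- max_offset on each axis (hypothetical helper).

    max_offset = 0      -> rotation only (3DoF)
    small max_offset    -> limited head movement (3DoF+-like behaviour)
    max_offset = inf    -> unconstrained translation (6DoF)
    """
    def clamp(value: float) -> float:
        return max(-max_offset, min(max_offset, value))

    return Pose(pose.yaw, pose.pitch, pose.roll,
                clamp(pose.x), clamp(pose.y), clamp(pose.z))


head = Pose(yaw=30.0, x=0.3, y=-0.05, z=1.2)
three_dof = constrain(head, 0.0)           # translation is discarded
limited = constrain(head, 0.5)             # translation kept within 0.5 m
six_dof = constrain(head, float("inf"))    # pose is unchanged
```

In this simplified model the rotation is never restricted; a windowed scenario would also need limits on the viewing direction, which the sketch leaves out.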

Part 2: OMAF

OMAF is a profile that explains how to use the MPEG tools with omnidirectional media. Work has kicked off on a second edition that adds support for 3DoF+ and social VR, with the plan to reach Committee Draft (CD) in October 2018.

Additionally, a test framework has been proposed to assess the performance of various CMAF tools. Its main focus is on video, but MPEG's audio subgroup has a similar framework to enable subjective testing. It would be interesting to see these two frameworks combined in one way or another.

OMAF introduces new ISOBMFF/MP4 boxes and models. This is very interesting for VRTogether to follow, but it might be too complex to implement at this stage of the specification. In addition, OMAF targets the newest technologies while VRTogether wants to deploy with existing ones. There is room for MPEG contributions in this area.
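For readers unfamiliar with the term, a "box" is the basic building block of an ISOBMFF/MP4 file: a 32-bit big-endian size, a four-character type, then the payload. The Python sketch below walks the top-level boxes of a byte stream to show what that structure looks like in practice. It is a minimal illustration, not a full parser: `iter_boxes` and `make_box` are hypothetical helper names, the sample data is synthetic, and the box types used (`ftyp`, `free`, `mdat`) are generic ISOBMFF ones rather than anything specific to OMAF.

```python
import io
import struct


def iter_boxes(stream, end=None):
    """Yield (type, payload_offset, payload_size) for each box at one level."""
    while True:
        start = stream.tell()
        if end is not None and start >= end:
            return
        header = stream.read(8)
        if len(header) < 8:
            return  # end of data (or truncated header)
        size, box_type = struct.unpack(">I4s", header)
        header_len = 8
        if size == 1:
            # A size of 1 means a 64-bit "largesize" field follows the type.
            large = stream.read(8)
            if len(large) < 8:
                return
            (size,) = struct.unpack(">Q", large)
            header_len = 16
        elif size == 0:
            # A size of 0 means the box extends to the end of the stream.
            stream.seek(0, io.SEEK_END)
            limit = stream.tell() if end is None else end
            size = limit - start
        if size < header_len:
            return  # malformed box, stop rather than loop forever
        yield box_type.decode("ascii", "replace"), start + header_len, size - header_len
        stream.seek(start + size)  # jump to the next sibling box


def make_box(box_type, payload=b""):
    """Build a box with a 32-bit size field (helper for the demo only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


sample = (make_box(b"ftyp", b"isom" + b"\x00" * 4)
          + make_box(b"free")
          + make_box(b"mdat", b"\x01\x02\x03"))
boxes = list(iter_boxes(io.BytesIO(sample)))
for name, offset, size in boxes:
    print(name, offset, size)
```

Container boxes can be explored the same way by calling `iter_boxes` again on the payload range, passing `end=offset + size` after seeking to `offset`.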

OMAF also specifies some support for timed metadata (such as timed text for subtitles), which we need to follow as well. Our current workflow relies on many static parameters on the capture and rendering sides that may become dynamic.

Part 3: Versatile Video Coding

This part focuses on immersive video coding and will be the successor of HEVC. The name VVC (Versatile Video Coding) was chosen by a show of hands at the meeting. Current experiments show that the VVC codec can outperform HEVC by 40%. The release of VVC is planned for October 2020.

Part 8: Network-Based Media Processing

Network-Based Media Processing (NBMP) is a framework that allows service providers and end-users to describe media processing operations that are to be performed by the network. NBMP describes the composition of network-based media processing services out of a set of network-based media processing functions and makes these NBMP services accessible through Application Programming Interfaces (APIs).

Motion Spell, a partner of the VRTogether project, decided to attend some sessions on NBMP during the 122nd MPEG meeting, since this new activity, which allows building media workflows, has generally been ignored so far. One of the main use cases covered by the output of the previous MPEG meeting in Gwangju was the ingest of media for distribution. Unified Streaming, which co-chairs this work, wants to standardize ingest. This is very exciting for VRTogether: Motion Spell is interested in implementing an ingest component. For example, it could be useful to compare our low-latency ingest implementation with the status of this standardization effort by the end of the project.

TNO, also a partner of the VRTogether project, proposed a contribution that focuses on extending scene description for 3D environments within the scope of NBMP. This contribution also presented a tentative list of NBMP functions that could be useful for Social VR:

- Background removal.

- User detection and scaling.

- Room composition without users.

- Room composition with users.

- Low-latency 3DoF encoding.

- Network-based media synchronization.

- 3D audio mixing functionality.

- Lip-sync compensation.
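The idea of composing a service out of named processing functions can be illustrated with a few lines of Python. This is only a conceptual sketch under our own assumptions: it does not reproduce the NBMP API or its description format, and every name in it (`REGISTRY`, `register`, `build_service`, the two placeholder functions and the dictionary used as a frame) is hypothetical. The two functions borrow their names from the list above but do no real media processing.

```python
from typing import Callable, Dict, List

Frame = Dict[str, object]
MediaFunction = Callable[[Frame], Frame]

# Hypothetical catalogue of processing functions offered by the network.
REGISTRY: Dict[str, MediaFunction] = {}


def register(name: str):
    """Decorator that publishes a function in the catalogue under a name."""
    def decorator(fn: MediaFunction) -> MediaFunction:
        REGISTRY[name] = fn
        return fn
    return decorator


@register("background-removal")
def remove_background(frame: Frame) -> Frame:
    out = dict(frame)
    out["background"] = None  # placeholder for real segmentation
    return out


@register("user-scaling")
def scale_user(frame: Frame) -> Frame:
    out = dict(frame)
    out["scale"] = 0.5  # placeholder for real detection and scaling
    return out


def build_service(function_names: List[str]) -> MediaFunction:
    """Compose a service from an ordered list of catalogue function names."""
    missing = [name for name in function_names if name not in REGISTRY]
    if missing:
        raise KeyError(f"unknown functions: {missing}")
    steps = [REGISTRY[name] for name in function_names]

    def service(frame: Frame) -> Frame:
        for step in steps:
            frame = step(frame)
        return frame

    return service


# The workflow is plain data, so a client could describe it and send it over an API.
workflow = ["background-removal", "user-scaling"]
service = build_service(workflow)
result = service({"user": "alice", "background": "office", "scale": 1.0})
print(result)
```

The point of the design is that the client only describes the workflow as data, while the functions themselves live and run on the network side.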

Who we are

Motion Spell is an SME specialized in audio-visual media technologies. Motion Spell was created in 2013 in Paris, France. On a conceptual and technical level, Motion Spell will focus on the development of open transmission tools. Furthermore, Motion Spell plans to explore encoding requirements for VR in order to participate in the current standardization efforts and first implementations. Finally, we will also assist on the playback side to ensure the end-to-end workflow is covered.

Come and follow us on this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca and Entropy Studio.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.