HUMAN4D: Human-Centric Multimodal Dataset for Motions and Immersive Media, created by VRTogether consortium members CERTH, CWI and Artanim

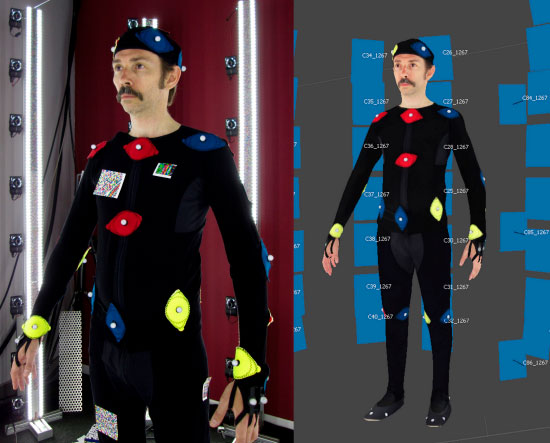

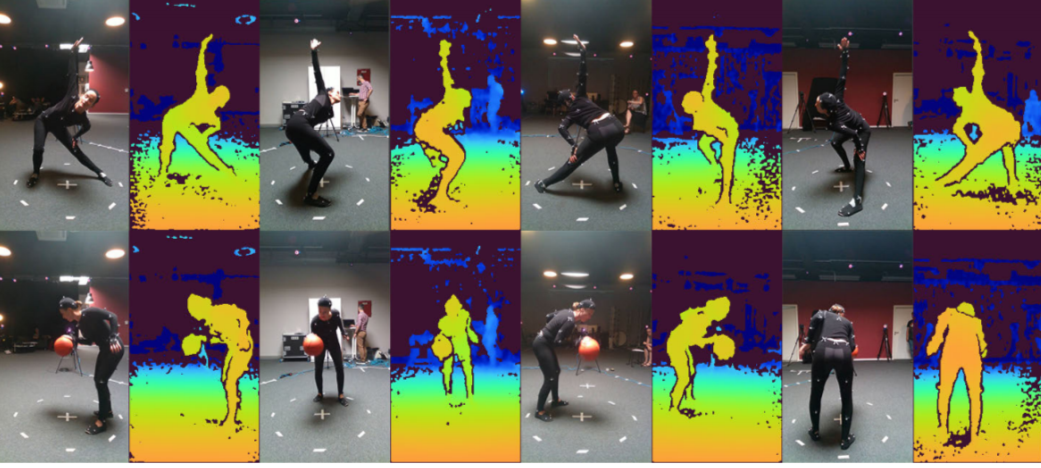

HUMAN4D is a new multimodal human-centric 4D dataset containing a large corpus with more than 50K samples. By capturing 2 female and 2 male professional actors performing various full-body movements and expressions, HUMAN4D provides a diverse set of motions and poses encountered as part of single- and multi-person daily, physical and social activities (jumping, dancing, etc.), along with multi-RGBD (mRGBD), volumetric and audio data.

Although multi-view color datasets captured with hardware (HW) synchronization already exist, HUMAN4D is the first and only public resource that provides volumetric depth maps with high synchronization precision, achieved through intra- and inter-sensor HW synchronization (HW-SYNC).

VRTogether consortium members CERTH, CWI and Artanim made all the data (http://dx.doi.org/10.21227/xjzb-4y45) and code (https://github.com/tofis/human4d_dataset) available online, including the respective synchronization, calibration and camera parameters, along with data loaders and other processing, visualization and evaluation tools, for academic use and further research.

The consortium members involved commit to continuously maintaining the dataset for the community by adding new tools, baselines and captures. The benchmarking subsets will nevertheless remain fixed, so that new state-of-the-art methods can be assessed and compared on the same data.

HUMAN4D and its associated tools will stimulate further research in computer vision and data-driven approaches, enabling work on human pose estimation and on real-time volumetric video reconstruction and compression using consumer-grade RGBD camera sensors.

Come and follow us in this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, TheMo and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.