User Lab

VRTogether aims to offer photo-realistic Social VR experiences between remote people, using affordable, off-the-shelf equipment over current-day network connections.

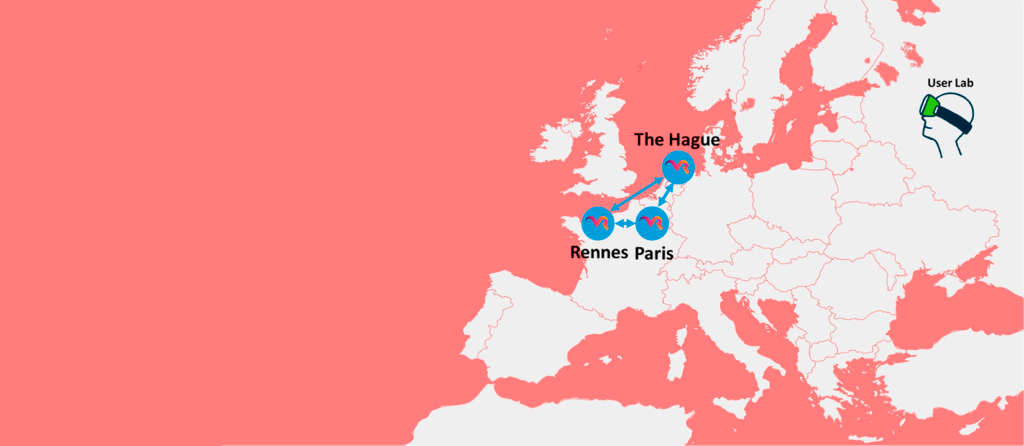

To achieve the targeted goals and demonstrate the project outcomes, the consortium has set up labs with the appropriate resources and infrastructure. The labs allow not only internal and local experimentation but also connected cross-country tests and experiences.

This section provides an overview of the available labs for both the native and web versions of the VR-Together platform. These connected labs constitute the VRTogether User Lab.

User Lab for Web VRTogether Platform

The User Lab for the Web VRTogether platform connects the facilities in The Hague (TNO, The Netherlands), Rennes (Viaccess-Orca, France) and Paris (Viaccess-Orca, France). Each distributed lab node can accommodate at least two simultaneous users.

TNO’s Lab node at The Hague (The Netherlands)

The TNO node is part of the Medialab. It includes the following resources and infrastructure:

- Azure Kinect, RealSense and Kinect v2 sensors

- Oculus Rift and Samsung Gear VR, HP Reverb, HTC Vive and other headsets

- MSI and Alienware development laptops

- Dell workstations

- Looking Glass displays (15.6” and 8K)

- GPU equipped servers (TNO research cloud)

Viaccess-Orca’s Lab node at Rennes (France)

It includes the following resources and infrastructure:

- One open space with 2 independent lite capture systems (single camera rig)

- 2 Kinect v2

- 1 Intel RealSense D435

- 2 Oculus Rift (CV1 & CV2)

- 2 Asus ROG portable stations

- 1 server hosting the Orchestration and SFU cloud services (located on VO premises)

Viaccess-Orca’s Lab node at Paris (France)

It includes the following resources and infrastructure:

- Showroom space with one lite capture system (single camera rig) and a video-camera-based teleconferencing setup

- 1 Kinect v2

- 1 Oculus Rift (CV1)

- 1 VR station

User Lab for Native VRTogether Platform

The User Lab for the Native VRTogether platform connects the facilities in Barcelona (i2CAT, Spain), Amsterdam (CWI, The Netherlands) and Thessaloniki (CERTH, Greece). Each distributed lab node can accommodate up to two simultaneous users.

i2CAT’s Lab node at Barcelona (Spain)

It includes the following resources and infrastructure:

- 2 rooms, each with a full capture system (Kinect v2 and Intel RealSense sensors)

- 4 Azure Kinect sensors

- 2 Oculus Rift

- 1 HTC Vive

- 2 Magic Leap headsets

- 1 server for the Point Cloud Multi-Control Unit (PC-MCU)

CWI’s Lab node at Amsterdam (The Netherlands)

It includes the following resources and infrastructure:

- 2 rooms with a full capture system each

- 2 Oculus Rift

- 8 RealSense D415 sensors

- 2 Reconstruction workstations

- 4 NUCs for time-varying mesh (TVM) capture

CERTH’s Lab node at Thessaloniki (Greece)

It includes the following resources and infrastructure:

- 11 Intel® RealSense™ depth cameras D415

- 4 Intel® RealSense™ depth cameras D435

- 8 Azure Kinect Developer Kits

- 4 NUCs used as capturers

- 4 laptops used as capturers

- 2 workstation PCs used for volumetric capture

- 2 high-performance laptops used for playout

- 2 Oculus Rift

- 1 HTC Vive

User Lab Experiments

Throughout the project's lifetime, many technical and user experiments are being conducted both at each VR-Together lab node and between the nodes. In addition, datasets are being created, and demos are being showcased not only at the User Lab facilities but also at international events and conferences.

The User Lab calendar for each year of the project is briefly outlined below.

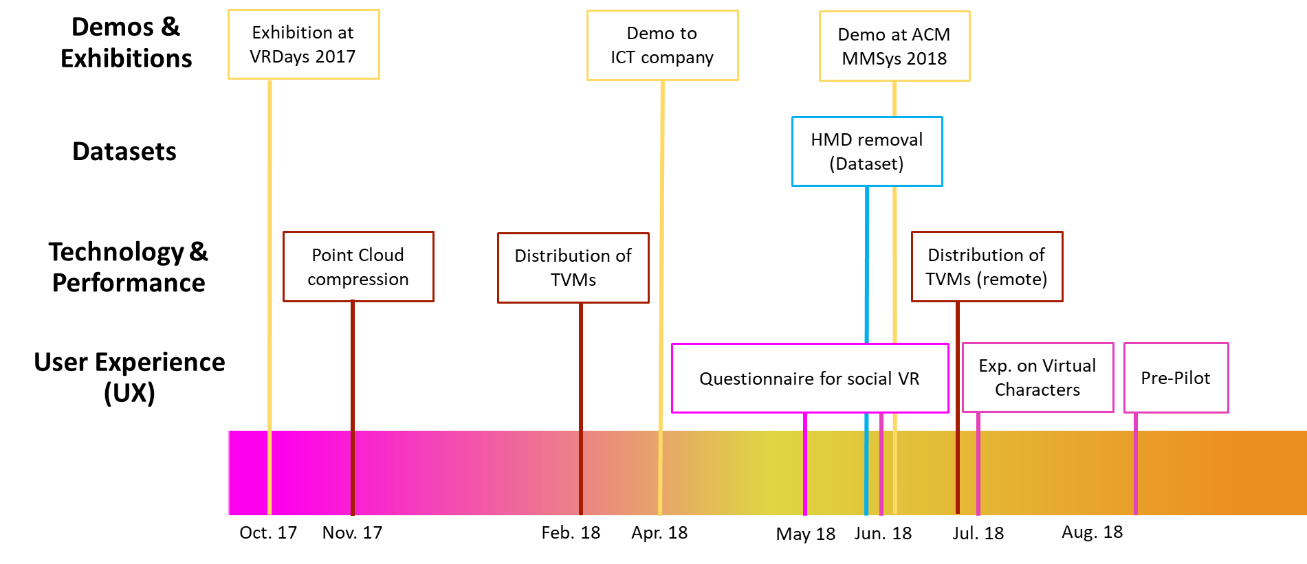

User Lab Calendar – Year 1

Key Pilot Actions (Year 1):

- Exhibition at VRDays 2017: A demo of the web platform was showcased, and feedback was collected from users on the relevance and importance of social VR in general and on the most important social VR use cases in particular. In total, 91 users tried our demo.

- Point Cloud Compression: A study exploring the objective and subjective quality assessment of point cloud compression. Through experimentation (n=23 users), objective quality metrics based on color distribution were proposed, and their performance was compared with that of the commonly used geometry-based metrics.

- Distribution of TVMs: A set of technical experiments assessing the readiness of TVM-based user representations for distribution in local and distributed scenarios, by gathering key performance metrics (e.g., delay, bandwidth and frames per second).

- Questionnaire for social VR: A new questionnaire for evaluating social VR experiences that combines presence, immersion and togetherness, applied to a photo-sharing experience.

- Pre-pilot: A new experimental protocol for evaluating social VR, applied to compare three experiences: a) face-to-face (baseline); b) Facebook Spaces (avatar-based); and c) the web VR-Together platform (realistic video-based representations).

Details about all Year 1 pilot actions are provided in Deliverable D4.2.

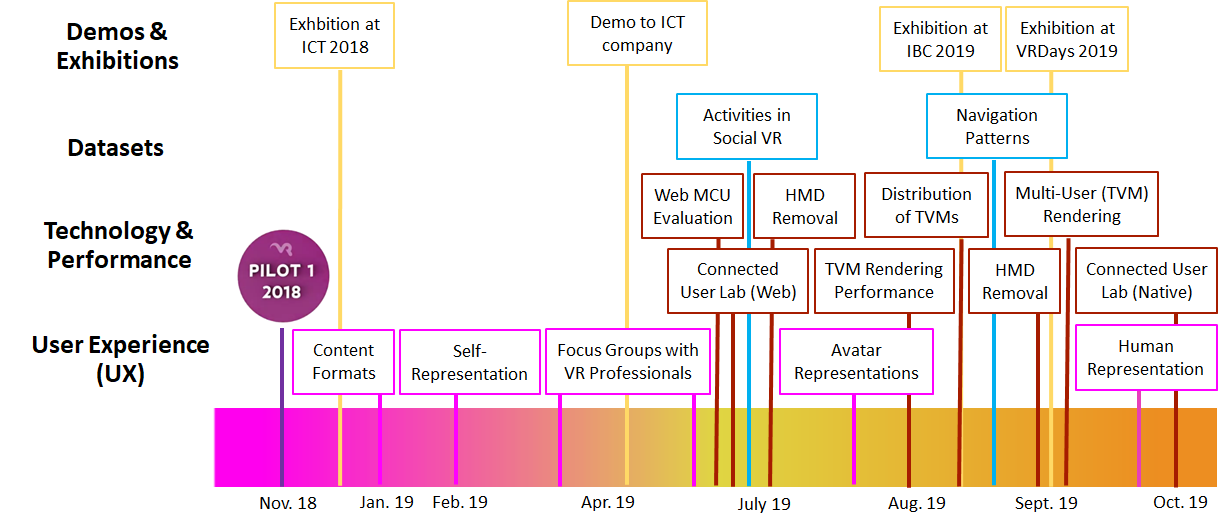

User Lab Calendar – Year 2

Key Pilot Actions (Year 2):

- Pilot 1: User tests (n=30 participants) in which the pilot 1 experience was showcased. The results show that the developed technology and experience jointly provide satisfactory levels of presence, interaction and togetherness, and that Social VR can have a significant impact across different use cases.

- Exhibition at IBC 2019: A demo of the VR-Together experience, using the technology and content from the first year of the project, was showcased at the Future Zone of IBC 2019. More than 300 visitors tried out our social VR experience, and more than 6,000 visitors came to our booth.

- Content Formats: An experiment (n=24 participants) assessing how different combinations of content formats for the VR scenes affect the user experience: 1) a full 3D environment; 2) stereoscopic VR360 video scenes; 3) a hybrid of a 3D environment and 2D billboards for scene dynamics. The results showed that the proposed hybrid approach provides the best user experience.

- Focus Groups with Professionals: Focus groups with representatives of 25 VR companies to obtain feedback on the potential and applicability of Social VR, on the project outcomes, and on the strategy to follow for efficient adoption in the commercial landscape.

- HMD Removal: Two subjective studies were conducted on this topic. The first assessed a proposed HMD removal method, leveraging the dataset created in Year 1. The second investigated the impact of HMD removal on the quality of dyadic (two-person) communication, in a setting where two users sat at a table in a shared VR environment and were instructed to perform a role-playing task.

- Activities in Social VR Dataset: A novel dataset was created, capturing user performances in several modalities (appearance, body movements and audio sequences). The purpose of this dataset was threefold: a) challenging actions were captured to enable the investigation of pose estimation using spatio-temporally aligned multi-RGBD data; b) multi-person activities were captured along with audio to include social interaction sequences; and c) the scanned 3D character enables comparison between different types of user representations, i.e., time-varying mesh, 3D character and point cloud.

Details about all Year 2 pilot actions are provided in Deliverable D4.4.

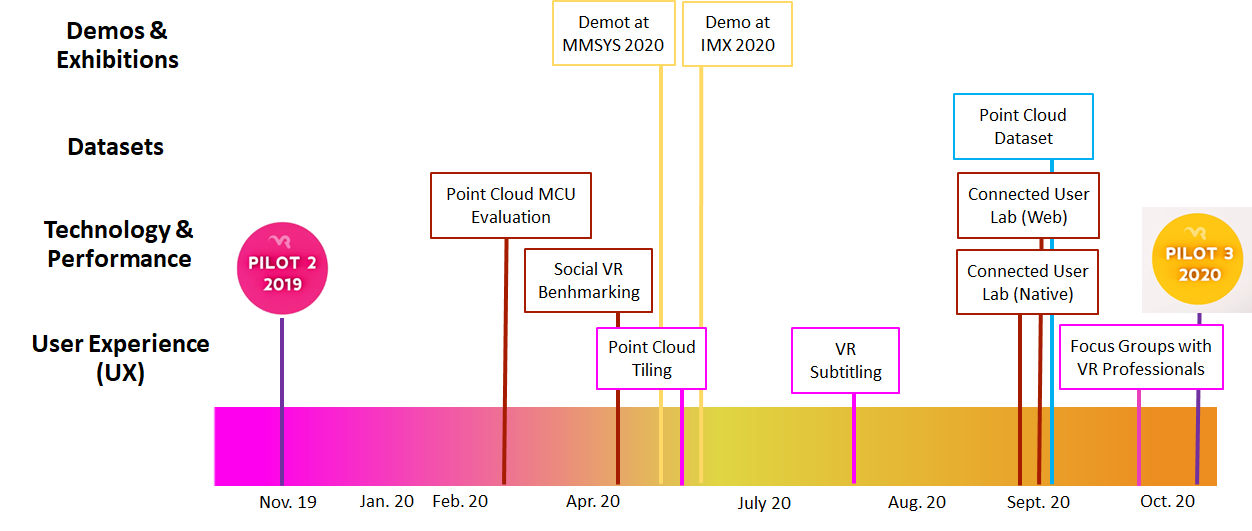

User Lab Calendar – Year 3

Key Pilot Actions (Year 3):

- Pilot 2: User tests (n=40 participants) in which both the pilot 1 and pilot 2 experiences were showcased. The results show that the new features introduced in pilot 2, i.e., support for more than two users and a live presenter, provide added value. They also show that social VR can have a significant impact in the broadcast sector.

- Point Cloud MCU Evaluation: An experiment showing that introducing the Point Cloud MCU yields significant savings in computational resources and bandwidth, thus reducing client-side requirements in holoconferencing services compared with a baseline condition without the MCU. This work received the Best Paper Award at ACM NOSSDAV 2020.

- Demo at ACM MMSys 2020: The multi-user point cloud pipeline was showcased and received the Best Demo Award at ACM MMSys 2020.

- Connected User Labs: [Planned in October 2020] Experiments to validate the connectivity between the User Labs for both the web and native VR-Together platforms, and to collect performance metrics.

- Point Cloud Tiling: [Planned in October/November 2020] Experiments to optimize and validate the delivery of point clouds using viewport-adaptive streaming techniques.

- Focus Groups with Professionals: [Planned in October/November 2020] Focus groups with VR experts to obtain feedback on the project outcomes and on the next steps to maximize their impact.

- Pilot 3: [Planned in October/November 2020] User tests in which the pilot 3 experience will be showcased.

Details about all Year 3 pilot actions will be provided in Deliverable D4.6.

The experiments conducted at and between the lab nodes have led to many high-impact publications.