World’s First Volumetric Video Conference (point clouds) over a Public 5G Network: a medical emergency example

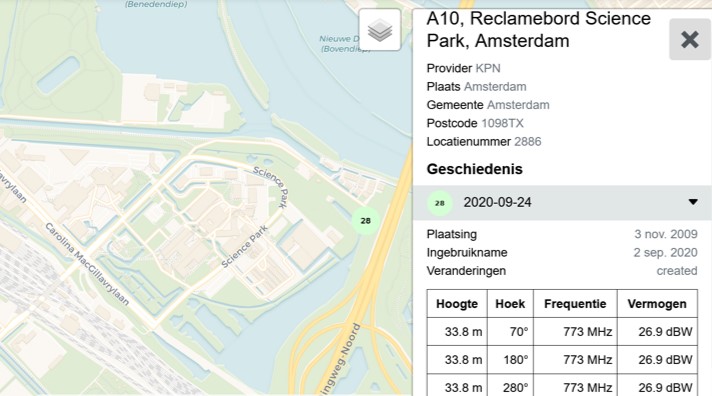

The doctor was captured on the VRDays stage using Azure Kinect cameras. On the same stage, the experience was captured by a virtual camera, projected on a big screen and broadcast in real time. The patient joined the session from outside, near Science Park in Amsterdam. They were captured with a Samsung S20 Ultra 5G smartphone and streamed in real time over KPN's standard public 5G network.

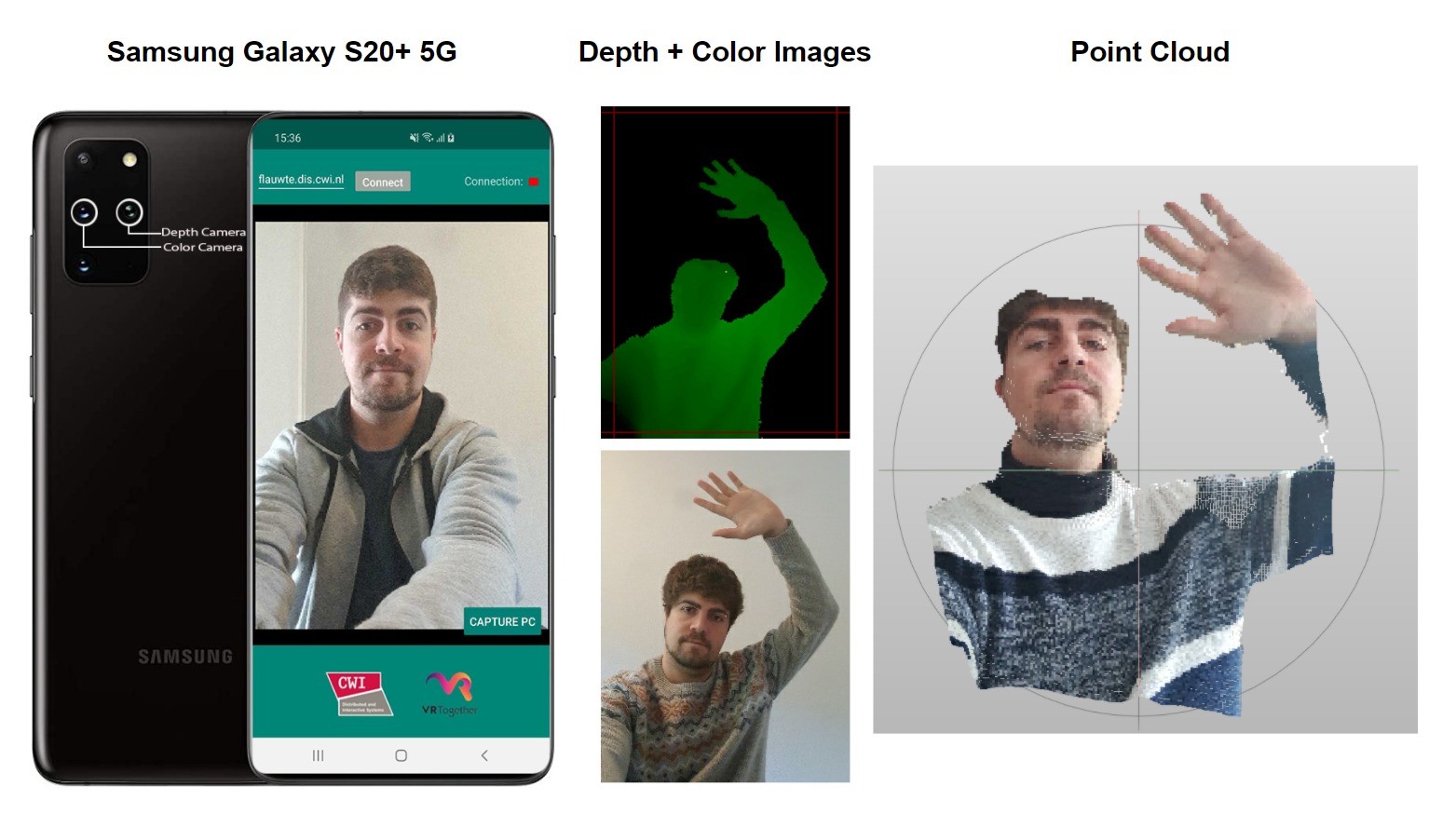

The capture application on the phone records frames from the phone's depth and color cameras. After applying alignment transformations, it computes a point cloud from the combined information in both frames. For the demo, we used a depth resolution of 240×180 pixels, which yielded an average of 20,000 points per frame. Streaming at 20 fps produces a 51.2 Mbit/s stream: too large for a 4G network (typical upload speeds are around 25 Mbit/s), but within the capacity of a 5G network (even at 700 MHz).
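The article does not describe how the capture app turns aligned depth and color frames into points. A common approach is pinhole back-projection: each valid depth pixel (u, v) with depth z becomes the 3D point ((u − cx)·z/fx, (v − cy)·z/fy, z), colored by the aligned color pixel. The sketch below assumes that approach. The intrinsics (`fx`, `fy`, `cx`, `cy`), the millimetre depth unit, the 4 m cut-off and the function name `depth_to_point_cloud` are all hypothetical, not the app's actual parameters or code.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

@dataclass
class Intrinsics:
    """Pinhole parameters of the depth camera, in pixels (hypothetical values)."""
    fx: float
    fy: float
    cx: float
    cy: float

# (x, y, z) in metres followed by (r, g, b) in 0..255
ColoredPoint = Tuple[float, float, float, int, int, int]

def depth_to_point_cloud(
    depth_mm: Sequence[Sequence[int]],
    color: Sequence[Sequence[Tuple[int, int, int]]],
    intr: Intrinsics,
    max_depth_mm: int = 4000,  # assumed cut-off for background pixels
) -> List[ColoredPoint]:
    """Back-project every valid depth pixel into a colored 3D point.

    Assumes the color frame is already aligned (registered) to the depth
    frame, so pixel (u, v) in both arrays refers to the same surface point.
    """
    points: List[ColoredPoint] = []
    for v, (depth_row, color_row) in enumerate(zip(depth_mm, color)):
        for u, (d, (r, g, b)) in enumerate(zip(depth_row, color_row)):
            if d <= 0 or d > max_depth_mm:
                continue  # drop holes (0) and far background
            z = d / 1000.0  # millimetres -> metres
            x = (u - intr.cx) * z / intr.fx
            y = (v - intr.cy) * z / intr.fy
            points.append((x, y, z, r, g, b))
    return points

# Toy 2x2 frame instead of the 240x180 frame used in the demo
intr = Intrinsics(fx=100.0, fy=100.0, cx=0.0, cy=0.0)
depth = [[1000, 0], [2000, 5000]]
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (9, 9, 9)]]
cloud = depth_to_point_cloud(depth, rgb, intr)
print(len(cloud))  # 2: one hole and one too-distant pixel are dropped
```

Discarding invalid and distant pixels is one reason a frame can contain fewer points than the sensor's pixel count.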

| Depth resolution (px) | Max points/frame | Bytes/point | Header (bytes) | Body (bytes) | Total (bytes/frame) | Frame rate (fps) | Throughput (MB/s) | Throughput (Mbit/s) |
|---|---|---|---|---|---|---|---|---|
| 240×180 | 20,000 | 16 | 24 | 320,000 | 320,024 | 20 | 6.40 | 51.20 |
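The table's arithmetic is: frame size = header + points × bytes per point, and bitrate = frame size × fps × 8. The sketch below reproduces it. The 16-byte layout (three float32 coordinates plus an RGBA color) is an assumption that merely matches the stated size. The article does not specify the actual per-point format, and `stream_rate` is a hypothetical helper name.

```python
import struct

# Hypothetical per-point layout: x, y, z as float32 plus an RGBA color.
# The article only states 16 bytes/point; this is one layout that fits.
POINT_FORMAT = "<3f4B"
HEADER_BYTES = 24      # per-frame header size from the table
UPLINK_4G_MBPS = 25.0  # typical 4G upload figure quoted in the text

def stream_rate(points_per_frame: int, fps: int,
                bytes_per_point: int = struct.calcsize(POINT_FORMAT),
                header_bytes: int = HEADER_BYTES):
    """Return (bytes per frame, MB/s, Mbit/s) for an uncompressed stream."""
    frame_bytes = header_bytes + points_per_frame * bytes_per_point
    bytes_per_s = frame_bytes * fps
    return frame_bytes, bytes_per_s / 1e6, bytes_per_s * 8 / 1e6

frame_bytes, mbytes_s, mbits_s = stream_rate(20_000, 20)
print(frame_bytes, round(mbytes_s, 2), round(mbits_s, 2))  # 320024 6.4 51.2
print("fits 4G uplink:", mbits_s <= UPLINK_4G_MBPS)         # fits 4G uplink: False
```

The rate scales linearly with points per frame and frame rate, so halving either roughly halves the bitrate. These figures describe raw point data, before any point cloud compression is applied.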

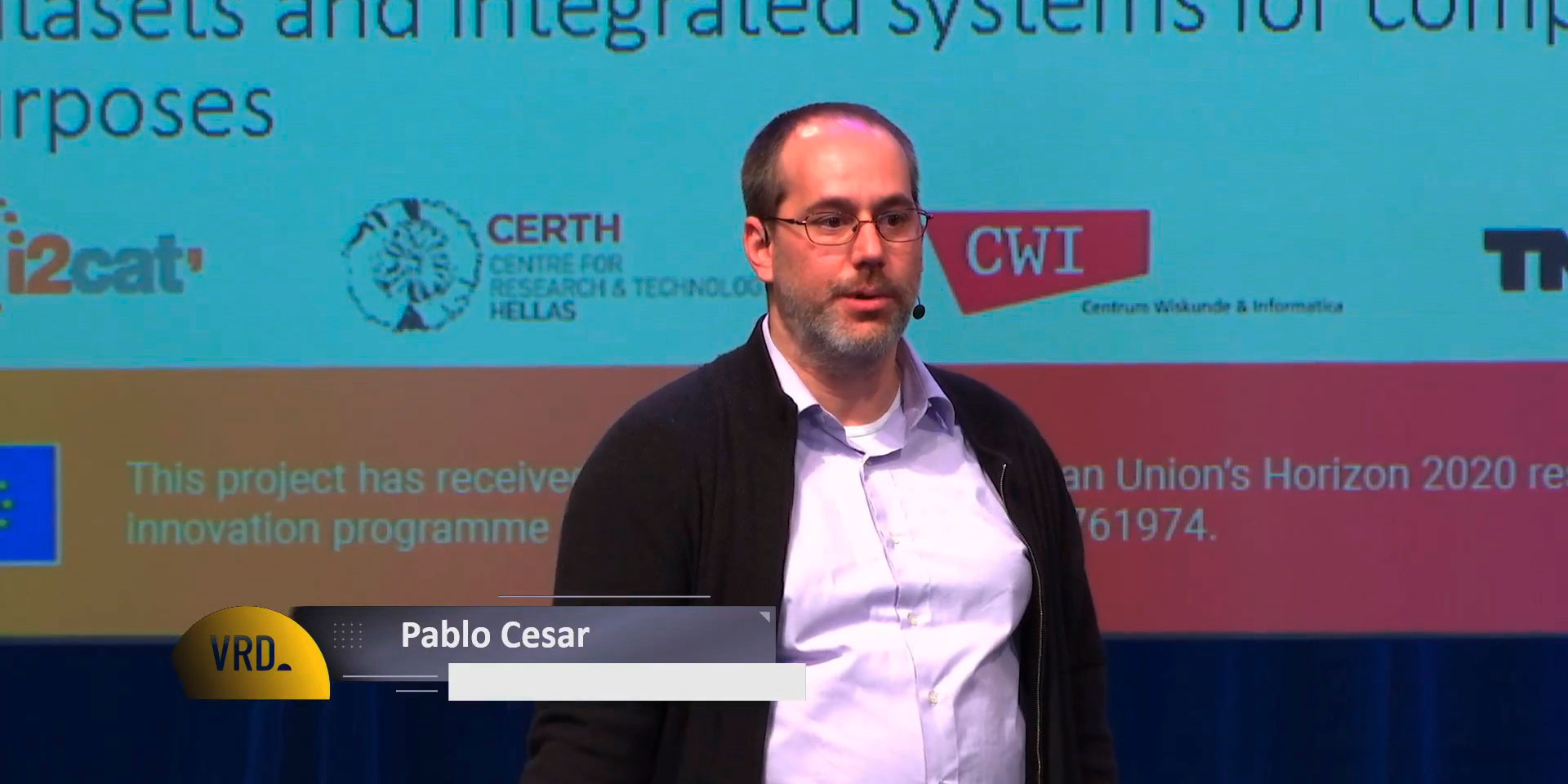

The VRTogether platform was used to orchestrate the experience. In the project, we have developed an end-to-end pipeline for delivering volumetric video as point clouds. The platform has three distinctive characteristics. First, it provides a real-time experience, so we can explore new forms of communication and collaboration using volumetric video. Second, it supports optimization mechanisms based on the context of the interaction and on human behavior. Third, it is extensible, so the technical components (capture, compression, delivery, rendering) can be customized to the needs of the interaction. The platform uses an award-winning point cloud compression algorithm developed by the Distributed and Interactive Systems group at CWI.
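The article does not document VRTogether's internal interfaces. The extensibility it describes can still be illustrated with a pipeline whose stages (capture, encode, delivery, decode, render) are independent, swappable callables. In the sketch below, everything is hypothetical: the `Pipeline` class, the stage names, and the loopback "delivery". `zlib` is only a generic stand-in for a point cloud codec. It is not CWI's compression algorithm.

```python
import struct
import zlib
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]
Frame = List[Point]

@dataclass
class Pipeline:
    # Each stage is a plain callable, so any one can be replaced independently
    capture: Callable[[], Frame]
    encode: Callable[[Frame], bytes]
    deliver: Callable[[bytes], bytes]
    decode: Callable[[bytes], Frame]
    render: Callable[[Frame], None]

    def run_once(self) -> int:
        """Push one frame through all stages; return the payload size in bytes."""
        payload = self.encode(self.capture())
        self.render(self.decode(self.deliver(payload)))
        return len(payload)

def raw_encode(frame: Frame) -> bytes:
    # Uncompressed: three little-endian float32 values per point
    return b"".join(struct.pack("<3f", *p) for p in frame)

def raw_decode(data: bytes) -> Frame:
    return list(struct.iter_unpack("<3f", data))

def zlib_encode(frame: Frame) -> bytes:
    # Generic stand-in for a real point cloud codec
    return zlib.compress(raw_encode(frame))

def zlib_decode(data: bytes) -> Frame:
    return raw_decode(zlib.decompress(data))

captured: Frame = [(0.0, 0.0, 1.0), (0.5, 0.25, 2.0)] * 100  # synthetic frame
rendered: List[Frame] = []
loopback = lambda b: b  # stands in for network delivery

raw = Pipeline(lambda: captured, raw_encode, loopback, raw_decode, rendered.append)
packed = Pipeline(lambda: captured, zlib_encode, loopback, zlib_decode, rendered.append)

raw_size = raw.run_once()
packed_size = packed.run_once()
print(raw_size, packed_size)
```

Only the encode and decode stages differ between the two pipelines. This is the kind of per-component customization the platform description refers to.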

VRDays is the leading European conference and exhibition on Virtual, Augmented and Mixed Reality content, creativity and innovation. The event took place at Amsterdam’s De Kromhouthal on Wednesday, 4th of November.

CWI’s Distributed and Interactive Systems research group focuses on facilitating and improving the way people access media and communicate with others and with the environment. They address key problems for society and science, resulting from the dense connectivity of content, people, and devices. The group uses recognized scientific methods, following a full-stack, experimental, and human-centered approach.

Sound helps people and businesses grow and prosper by unlocking their digital potential. They enable their customers to make sense of technology through education and training, experiments, and scaling new business models, so that they can lead their own way forward. Sound focuses especially on the Future of Work, the Future of Ethics, the Future of Trust and the Future of Mobility. In this way, VR is a perfect crossover between the domains of work and mobility.

Come and follow us in this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, TheMo and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.