IBC 2019 report: VR still leads innovation

The IBC (International Broadcasting Convention), held in Amsterdam every September, remains a major milestone for any company in the video technology industry, and for anyone who wants to take part in the future of media. It still appears to be the world's most influential media and technology show. In 2019, the number and quality of specialized exhibitors attracted more than 56,000 attendees, the highest attendance of any edition so far.

VRTogether (VRT) is a world-class consortium of research institutes and companies evaluating “togetherness” in VR. The results have been so promising that IBC granted the consortium a free booth in 2018 and one of the largest booths of the Future Zone in 2019.

This article first presents VRTogether's demonstrations, then summarizes the most interesting developments and innovations we saw at IBC that will shape Social VR in the coming months and years.

VRTogether at IBC

The Future Zone brought together the very latest ideas, innovations and concept technologies from international industry and academia, and showcased them in a single, specially curated area where innovators meet. Alongside the booths, the zone offered open access to the IABM Future Trends Theater and its interesting program. Major trends this year included 8K, 5G and immersive media (VR/AR/XR). The VR Together booth was ideally located in the center of the zone, with high visibility, and attracted a lot of attention.

The VRT demonstration focused on real-time, photorealistic 3D capture and on integrating a live avatar into a scenario lasting several minutes. The booth attracted a large and well-qualified audience, and the VRT project received lots of feedback from industry leaders, researchers and tech enthusiasts who immediately perceived the potential of such a medium for the future. In line with the broader ecological trend, all industries, from healthcare to manufacturing, from education to sport, from training to social gaming, plan to use VR.

Besides the booth, another VRT partner presented a paper introducing a further outcome of the project: a web-based social VR framework for rapidly developing, testing and evaluating social VR experiences.

Based on this framework, the paper presents an evaluation of six user experiences in both 360-degree and 3D volumetric VR. The paper is accessible here:

Standout innovative VR technologies

Besides the VRT booth, there were many other VR-related booths, and some of them caught our attention.

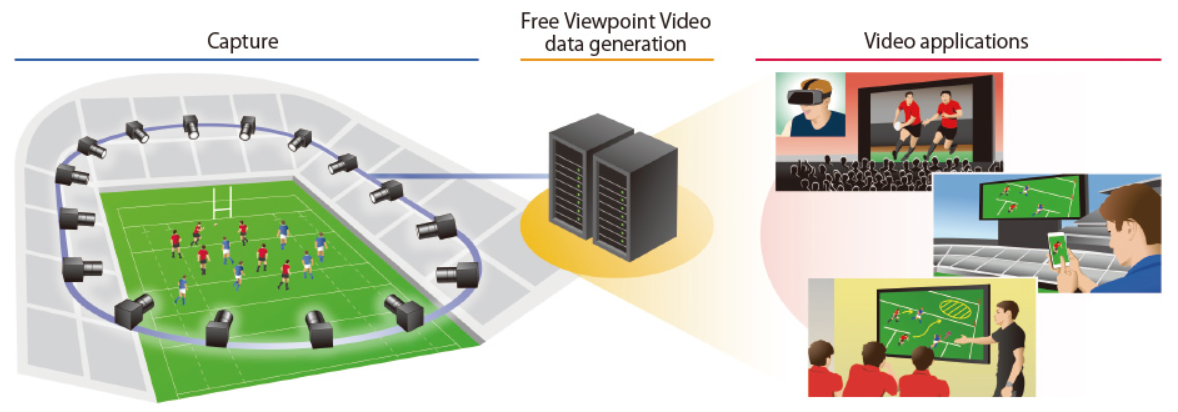

Canon: VR reconstruction for sports replays

The Canon Group develops cutting-edge technologies to create a unique immersive experience that places the spectator in the middle of the action: the Free Viewpoint Video System. According to Canon, this system relies on its imaging technologies to provide a video experience like never before (https://global.canon/en/technology/frontier18.html).

Viewers can watch the same scene from various angles, switching to the perspective of an athlete on the field or to any number of alternative viewpoints. They can also control both the viewpoint and the game time at will. This technology could dramatically change the way sports are watched.

OMAF4CLOUD: Standards-enabled 360° video creation as a service

Omnidirectional Media Format (OMAF) is a media standard for 360° content developed by the Moving Picture Experts Group (MPEG). To address the needs of advanced media processing and delivery services, MPEG is developing a new standard called Network-based Media Processing (NBMP), which aims to make media processing more efficient, faster and cheaper by leveraging public, private or hybrid cloud services. MPEG presented a very interesting paper that covers both the OMAF and NBMP standards. It also describes an end-to-end design and a proof of concept that delivers an immersive virtual reality experience to end users: https://show.ibc.org/__media/Files/Tech%20Papers%202019/G3-201-Yu-You.pdf
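OMAF defines how 360° content is mapped onto ordinary rectangular video frames, and one of the projections it supports is the equirectangular projection (ERP). The Python sketch below illustrates that mapping. It assumes azimuth in degrees growing to the left, elevation growing upward and the frame centre at (0°, 0°); we believe this matches OMAF's ERP convention, but the exact sample-position rules should be checked against ISO/IEC 23090-2. The function names `sphere_to_erp` and `erp_to_sphere` are hypothetical.

```python
"""Sketch: map a viewing direction to a pixel in an equirectangular (ERP) frame.

Assumed convention (hypothetical, check ISO/IEC 23090-2 for the normative rules):
azimuth in [-180, 180] degrees, positive to the left; elevation in [-90, 90]
degrees, positive up; direction (0, 0) lands on the frame centre.
"""


def sphere_to_erp(azimuth_deg: float, elevation_deg: float,
                  width: int, height: int) -> tuple[float, float]:
    """Return the (u, v) position, in pixels, of a direction on the sphere."""
    if not -180.0 <= azimuth_deg <= 180.0:
        raise ValueError("azimuth must be in [-180, 180]")
    if not -90.0 <= elevation_deg <= 90.0:
        raise ValueError("elevation must be in [-90, 90]")
    # Azimuth spans the full width: +180 maps to the left edge, -180 to the right edge.
    u = (0.5 - azimuth_deg / 360.0) * width
    # Elevation spans the full height: +90 (straight up) maps to the top row.
    v = (0.5 - elevation_deg / 180.0) * height
    return u, v


def erp_to_sphere(u: float, v: float, width: int, height: int) -> tuple[float, float]:
    """Inverse mapping: pixel position back to (azimuth, elevation) in degrees."""
    azimuth = (0.5 - u / width) * 360.0
    elevation = (0.5 - v / height) * 180.0
    return azimuth, elevation


if __name__ == "__main__":
    W, H = 3840, 1920  # hypothetical 2:1 ERP frame
    print(sphere_to_erp(0.0, 0.0, W, H))   # straight ahead: frame centre
    print(sphere_to_erp(90.0, 0.0, W, H))  # 90 degrees to the left
    print(erp_to_sphere(*sphere_to_erp(-45.0, 30.0, W, H), W, H))  # round trip
```

A 2:1 frame gives the same angular resolution horizontally and vertically at the equator, but rows near the poles are heavily oversampled, a known drawback of ERP; OMAF also supports a cubemap projection.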

Nokia: Real-time decoding and AR playback of the emerging MPEG video-based point cloud compression standard

Nokia presented the world’s first implementation of the upcoming MPEG standard for video-based point cloud compression (V-PCC) on today’s mobile hardware.

With ISO/IEC 23090-5 about to be published as an International Standard, this first V-PCC implementation is an important step in demonstrating the standard's relevance to the public.

Nokia, which won an award, describes all of its work on this topic in a paper:

https://show.ibc.org/__media/Files/Tech%20Papers%202019/Sebastian-Schwarz.pdf

ImAc: Immersive Accessibility

The goal of Immersive Accessibility (ImAc), funded by the EU as part of the H2020 framework program, is to explore how accessibility tools and access services can be integrated into immersive media, and in particular into 360-degree content. Accessibility should not be regarded as an afterthought; it should be considered throughout the design, production and delivery process.

What are the next challenges?

Some video industry professionals see VR as a declining trend. From the VRT project's point of view, it is not, and we are not alone in thinking so. Head-mounted displays are cumbersome, and this is the main reason why AR and VR are converging into XR.

A common denominator of many VR projects is their ability to handle 3D volumetric data, and in particular to compress dynamic 3D point clouds. The main philosophy behind V-PCC is to leverage existing video codecs to compress the geometry and texture information of dynamic point clouds. This is essentially achieved by converting the point cloud into a set of video sequences. In particular, three video sequences are generated: one that captures the geometry information, one that captures the texture information of the point cloud, and an occupancy map that indicates which pixels of the geometry and texture images correspond to actual points. These sequences are then compressed using existing video codecs, such as MPEG-4 AVC, HEVC, AV1 or similar.
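The conversion from points to videos can be illustrated with a deliberately simplified Python sketch. It is not the V-PCC algorithm: real encoders segment the cloud into patches, project each patch onto one of several planes, pack the patches into the frame and keep more than one depth layer. Here, as an assumption for clarity, every point is projected orthographically onto a single XY plane, keeping the point closest to the plane. The names `project_point_cloud` and `ProjectedFrame` are hypothetical.

```python
"""Toy illustration of the V-PCC idea: turn a point cloud into 2D images.

Heavily simplified and hypothetical: one orthographic projection along Z,
no patch segmentation, no packing, a single depth layer.
"""
from dataclasses import dataclass

Point = tuple[int, int, int]  # integer (x, y, z) voxel coordinates
Color = tuple[int, int, int]  # (r, g, b), each 0..255


@dataclass
class ProjectedFrame:
    geometry: list[list[int]]     # depth (z) per pixel -> "geometry video"
    texture: list[list[Color]]    # colour per pixel    -> "texture video"
    occupancy: list[list[int]]    # 1 if pixel holds a real point -> "occupancy map"


def project_point_cloud(points: list[Point], colors: list[Color],
                        width: int, height: int) -> ProjectedFrame:
    """Project points onto the XY plane, keeping the one with the smallest z per pixel."""
    geometry = [[0] * width for _ in range(height)]
    texture = [[(0, 0, 0)] * width for _ in range(height)]
    occupancy = [[0] * width for _ in range(height)]
    for (x, y, z), rgb in zip(points, colors):
        if not (0 <= x < width and 0 <= y < height):
            continue  # outside this toy image; a real encoder would add patches
        # Keep the point nearest to the projection plane; farther ones are dropped.
        if occupancy[y][x] == 0 or z < geometry[y][x]:
            geometry[y][x] = z
            texture[y][x] = rgb
            occupancy[y][x] = 1
    return ProjectedFrame(geometry, texture, occupancy)


if __name__ == "__main__":
    frame = project_point_cloud(
        points=[(0, 0, 5), (0, 0, 2), (1, 1, 7)],
        colors=[(255, 0, 0), (0, 255, 0), (0, 0, 255)],
        width=2, height=2,
    )
    print(frame.geometry)   # depth image that would be fed to a video encoder
    print(frame.occupancy)  # which pixels carry real points
```

In this toy version, any point hidden behind another one on the same pixel is simply lost, as with the red point in the example; V-PCC's patch segmentation and near/far depth layers are designed to reduce such losses.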

V-PCC won people over at IBC for several reasons:

1) The video-based approach relies on existing video codecs (e.g. HEVC) and can therefore benefit from existing hardware acceleration. MPEG provides reference software.

2) Standardization (codec and transport) should be finalized soon, by the end of 2019.

3) The compression ratio is remarkable. The lack of test material makes bandwidth predictions difficult, but we are talking about a few Mbps for a realistic human representation.

4) This approach is AR/VR compatible.
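To give a sense of why the compression ratio impressed, a back-of-the-envelope calculation helps. The figures below (1 million points per frame, 10-bit coordinates, 8-bit RGB colour, 30 frames per second, a 5 Mbps target) are our own illustrative assumptions, not measured V-PCC results, and the helper names are hypothetical.

```python
"""Back-of-the-envelope raw bitrate of a dynamic point cloud vs. a compressed stream.

All figures are hypothetical assumptions chosen for illustration only.
"""


def raw_bitrate_bps(points_per_frame: int, geometry_bits: int,
                    color_bits: int, fps: float) -> float:
    """Uncompressed bitrate: each point stores 3 coordinates and 3 colour channels."""
    bits_per_point = 3 * geometry_bits + 3 * color_bits
    return points_per_frame * bits_per_point * fps


def compression_ratio(raw_bps: float, compressed_bps: float) -> float:
    """How many times smaller the compressed stream is than the raw one."""
    return raw_bps / compressed_bps


if __name__ == "__main__":
    raw = raw_bitrate_bps(points_per_frame=1_000_000, geometry_bits=10,
                          color_bits=8, fps=30)
    target = 5e6  # assumed "few Mbps" operating point
    print(f"raw: {raw / 1e9:.2f} Gbps")                            # raw: 1.62 Gbps
    print(f"ratio at 5 Mbps: {compression_ratio(raw, target):.0f}:1")  # ratio at 5 Mbps: 324:1
```

Under these assumptions, a few Mbps corresponds to a reduction of more than two orders of magnitude compared with the raw data.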

At this stage, the only drawback is capture: it is either slow, expensive or low quality. The main challenge ahead is to capture high-quality, photorealistic 3D volumetric data in real time. We are actively looking for a solution, so don't hesitate to share your thoughts with us!

Text: Marc Brelot, Romain Bouqueau (Motion Spell)

Come and follow us on this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, Entropy Studio and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under grant agreement 762111.