New VR-Together Capture System based on cutting-edge technologies

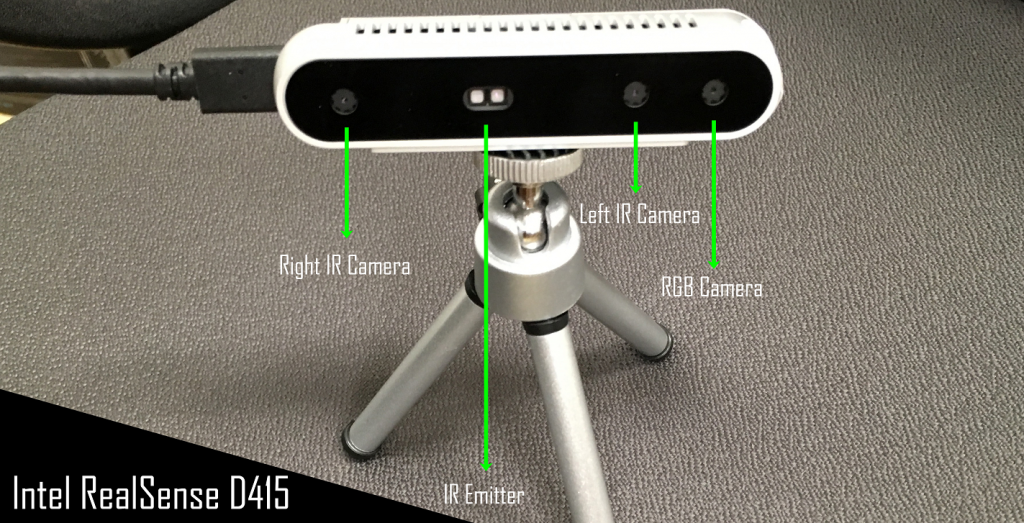

Microsoft has announced the discontinuation of the Kinect for Xbox One, the depth-sensing device integrated into the initial version of the people 3D capture system. However, Intel recently released a new line of depth-sensing devices, the Intel RealSense D400 Series, which provide high-quality, accurate depth data at high frame rates. CERTH has purchased four D415 and four D435 devices to integrate into the system, giving the VR-Together capture system higher hardware (HW) specifications.

Figure 1: Intel RealSense D415 devices in CERTH’s laboratory, with the Intel RealSense SDK viewer displaying the RGB and depth streams at Full HD (1920×1080) and 1280×720 resolution, respectively.

Intel also provides a low-level device API that gives direct control over the individual sensors of each device:

- Each sensor has its own power management and control.

- Different sensors can be safely used from different applications and can only influence each other indirectly.

- Each sensor can offer one or more streams of data. Streams must be configured together and are usually dependent on each other.

- All sensors provide streaming as a minimum capability, but each individual sensor can be extended to offer additional functionality.

- The Intel RealSense D400 stereo module offers Advanced Mode functionality, which lets you control the various ASIC registers responsible for depth generation.

Figure 2: A set of image sensors that capture the disparity between images, a dedicated RGB image signal processor for image adjustment and color-data scaling, and an active infrared projector (emitter) that illuminates objects to enhance the depth data.
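To make the role of the stereo pair more concrete, the sketch below shows the textbook relation for a rectified stereo pair, where depth is Z = f · B / d (focal length f in pixels, baseline B in metres, disparity d in pixels), followed by a pinhole back-projection of a pixel into a 3D camera-space point. This is a simplified illustration, not Intel's on-device depth pipeline: the D400 modules compute depth on their own ASIC, and the intrinsics and baseline used here are hypothetical values rather than real D415/D435 calibration data. Lens distortion is ignored; in practice librealsense offers `rs2_deproject_pixel_to_point`, which takes the stream intrinsics into account.

```python
"""Sketch: from stereo disparity to a 3D point using a distortion-free pinhole model.

All numeric values are hypothetical, not D415/D435 calibration data.
"""


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Return depth Z in metres via Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> tuple:
    """Back-project pixel (u, v) with depth Z into camera coordinates (X, Y, Z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 1280x720 depth image and a 50 mm baseline.
    fx = fy = 640.0
    cx, cy = 640.0, 360.0
    baseline_m = 0.05
    z = depth_from_disparity(fx, baseline_m, disparity_px=32.0)
    print(z)
    print(deproject(960.0, 360.0, z, fx, fy, cx, cy))
```

Because depth is inversely proportional to disparity, a one-pixel disparity error costs far more depth accuracy for distant objects than for near ones, which is one reason the active emitter's added texture matters for capturing people reliably.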

Beyond the hardware updates, CERTH has started to design and implement new versions of the sub-components used in the people 3D capture system.

New synchronization methods will be developed to better group and process the RGB-D frames captured by the multiple devices. Both Soft-HW and HW-based approaches will be investigated to extract the user's 3D point cloud more accurately, allowing a higher-quality 3D representation of the user in the VR-Together platform.
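As an illustration of what grouping multiple RGB-D frames can mean on the software side, the sketch below uses one camera as a reference and, for each of its frames, picks the nearest-in-time frame from every other camera, keeping the group only if all matches fall within a tolerance. This is a generic nearest-timestamp matching technique, not the method CERTH is developing. It assumes all timestamps share a common clock (for example, host-side arrival times in milliseconds), and the camera names, timestamps and `tolerance_ms` value are hypothetical. HW-based approaches would instead trigger the sensors from a shared signal, removing most of the offset this code has to tolerate.

```python
"""Sketch: software grouping of frames from several RGB-D cameras by timestamp.

Assumes all timestamps are in milliseconds on one shared clock.
Camera names and values below are hypothetical.
"""
from bisect import bisect_left
from typing import Dict, List, Optional


def nearest(timestamps: List[float], t: float) -> Optional[float]:
    """Return the value in a sorted list closest to t, or None if the list is empty."""
    if not timestamps:
        return None
    i = bisect_left(timestamps, t)
    # Only the neighbours around the insertion point can be the closest value.
    candidates = timestamps[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda s: abs(s - t))


def group_frames(streams: Dict[str, List[float]], reference: str,
                 tolerance_ms: float) -> List[Dict[str, float]]:
    """Build groups {camera: timestamp} anchored on each frame of the reference camera."""
    sorted_streams = {cam: sorted(ts) for cam, ts in streams.items()}
    groups = []
    for t_ref in sorted_streams[reference]:
        group = {reference: t_ref}
        for cam, ts in sorted_streams.items():
            if cam == reference:
                continue
            match = nearest(ts, t_ref)
            if match is None or abs(match - t_ref) > tolerance_ms:
                break  # some camera has no close-enough frame: drop this group
            group[cam] = match
        else:
            groups.append(group)
    return groups


if __name__ == "__main__":
    streams = {
        "cam0": [0.0, 33.3, 66.7],
        "cam1": [1.2, 34.0, 80.0],
        "cam2": [0.5, 35.1, 67.0],
    }
    groups = group_frames(streams, "cam0", tolerance_ms=5.0)
    print(len(groups))  # 2
```

A known limitation of this simple version is that one frame from a non-reference camera may end up in two consecutive groups when its frame rate is lower than the reference camera's; a production system would need to mark frames as consumed or match globally.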

Text and pictures: Argyris Chatzitofis, CERTH