Creating an Interactive VR Experience with the VRTogether platform

The third year of our project is rapidly approaching its end. Time flies when you’re having fun and all that, and we most definitely have not been sitting still. Besides overall platform improvements and other activities, we have been hard at work on our third-year pilot. Pilots are the projects we build on top of our platform to prove the technology. As we have reported before, the core of this pilot is all about interaction: between users, with the virtual environment, with virtual characters and with an overarching storyline.

In terms of content production this has been the most extensive and challenging of our pilots. We have detailed the virtual character creation before, and our amazing partners at The Modern Cultural Productions (TheMo) have created an exciting script as well as a gorgeous virtual apartment where the mystery surrounding Elena Armova’s murder gets resolved.

Motion Capture in a Global Pandemic

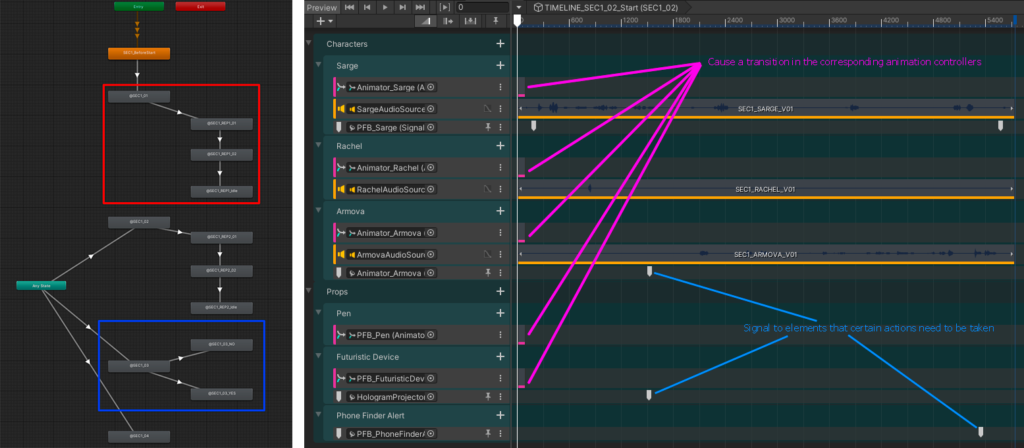

The pandemic we have all been facing this year caused us to schedule, reschedule, adapt and ultimately change our approach to the planned motion capture of our actors. With the actors coming from Spain, and Artanim being located in Switzerland, we would either incur unknown delays or have to get creative. Under normal conditions actors would come to our studio and get equipped with a mocap suit and markers for body mocap. At the same time, a dedicated helmet with a holder for an iPhone running Reallusion’s LIVE FACE app is used for the facial mocap. This all combines nicely into the Character Creator ecosystem we use for our characters.

With travel restrictions and quarantine periods in place, we decided to split our mocap approach. Artanim prepared and supplied the necessary hardware and software for facial mocap and shipped it off to Spain. There the TheMo team and the original actors recorded the facial mocap, the actor audio as well as video reference footage. In the meantime at Artanim we arranged for local Swiss actors to come to our studio, where we captured their body movements as they played out the scenes in sync with the recorded reference footage.

While this did give us the raw data within a more reasonable timeframe, it also meant that far more post-processing would be necessary. The various animations were mostly in sync, but required cleanup and fine-tuning. And of course not having the original actors recorded for their body movement meant that retargeting the animation, while a regular part of the process, was more involved as well. And to make our wonderful animator’s life even more challenging, we decided to add more detailed finger animation and prop interaction to our characters for this third pilot. But all the effort was worth it, because the combined content in pilot 3 is a significant step up in terms of quality from the first pilot the consortium produced.

Supporting Multiplayer Interaction

With all content created, the next step is putting it all together into an experience. At an early stage of development we realised that, in contrast to the first two pilots, pilot 3 would need a lot more synchronization of state and events between clients. Our storyline is non-linear and users interact with the environment, props and characters. This requires careful orchestration to ensure each participant observes the same experience as the others in a session.

Unfortunately the networking solutions available for Unity, our engine of choice, all had various downsides. Built-in networking was in a deprecated state with no clear viable replacement. And third-party offerings often ran in the cloud, which would mean less control on our side and would prevent us from demonstrating or using our platform on local networks such as those one might encounter at fairs and festivals.

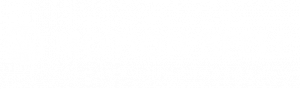

Looking for a solution it dawned upon us that our partners at Motion Spell and Viaccess-Orca were already developing their Orchestrator, which they have detailed in a series of 3 blog posts. It supported chat-like communication between users in a session. And with the addition of an event API as detailed in part 3, we realised we had all the building blocks we needed “in-house” to be able to support our experience. So we set out to develop a lightweight layer on top of the API to support a multiplayer experience in which one of the users acts as the authoritative server in charge of the session. Developers are able to freely create event message types to send data via the authoritative user to all others, and within custom components they can easily subscribe to messages of any type to handle them as they see fit.
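To make the idea of routing every event through an authoritative user more concrete, here is a minimal sketch in Python. It is not our actual Unity code and it does not use the Orchestrator API. The names `Session`, `Client` and `DoorOpened` are hypothetical, the session is an in-memory stand-in for the Orchestrator, and we simply assume that the first user to join becomes the authoritative one.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class DoorOpened:
    """Hypothetical event message type defined by a developer."""
    door_id: str


class Session:
    """In-memory stand-in for an Orchestrator session: it only relays messages."""

    def __init__(self) -> None:
        self.clients: Dict[str, "Client"] = {}
        self.master_id: str = ""

    def join(self, client: "Client") -> None:
        # Assumption of this sketch: the first user to join is authoritative.
        if not self.clients:
            self.master_id = client.user_id
        self.clients[client.user_id] = client

    def send_to_master(self, message: object) -> None:
        self.clients[self.master_id].on_master_receive(message)

    def broadcast(self, message: object) -> None:
        for client in self.clients.values():
            client.dispatch(message)


class Client:
    def __init__(self, user_id: str, session: Session) -> None:
        self.user_id = user_id
        self.session = session
        self.handlers = defaultdict(list)  # message type -> list of handlers
        session.join(self)

    def subscribe(self, message_type: type, handler: Callable) -> None:
        """Register a handler for one message type."""
        self.handlers[message_type].append(handler)

    def send(self, message: object) -> None:
        # Every message goes to the authoritative user first, never directly to peers.
        self.session.send_to_master(message)

    def on_master_receive(self, message: object) -> None:
        # The authoritative user could validate or reject here; this sketch forwards everything.
        self.session.broadcast(message)

    def dispatch(self, message: object) -> None:
        for handler in self.handlers[type(message)]:
            handler(message)
```

In this sketch a component would call `client.subscribe(DoorOpened, handler)` once and `client.send(DoorOpened("kitchen"))` whenever the event occurs. Because every message passes through a single user, all participants receive events in the same order.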

Using this lightweight layer we were able to support all the functionality we needed, from simple event flow triggers to the synchronization of player locations, controller positions and interactions, and more complex experience object state. This system has proven useful beyond this pilot as well, by now being used to support various other functionalities throughout our platform.

Turning Ingredients into an Experience

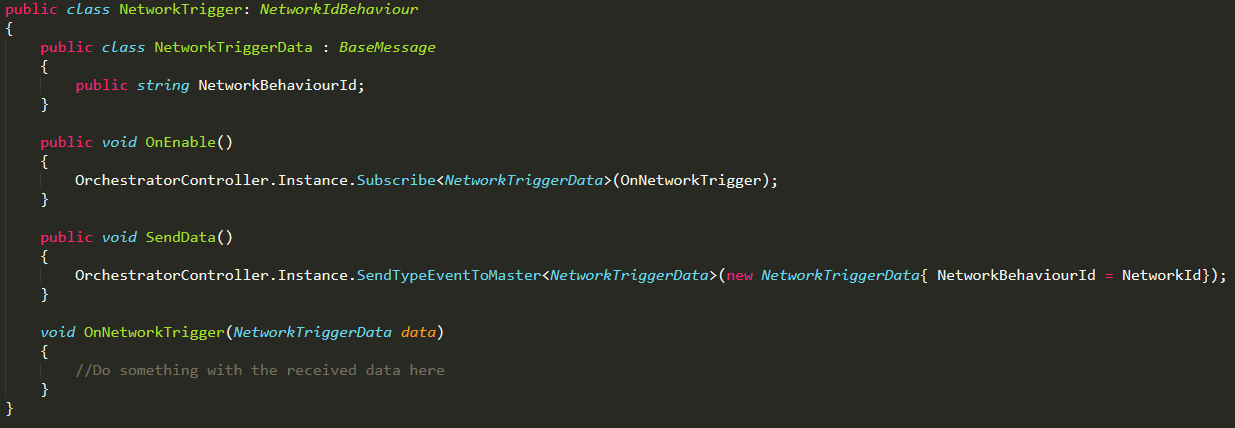

Unity provides two core mechanisms to create a narrative experience: Timelines and AnimationControllers. As the name indicates, a Timeline allows you to organize your assets over time. In our case this would include animations, character dialog, foley sounds, event triggers and a variety of other elements with a clear place in time. However, our experience is not fully linear, given that the progress depends on user actions or their inaction. This implies that character animation can undergo a variety of transitions. This aspect is better expressed in an AnimationController, which is a directed graph of animation clips in which transitions are expressed by directional edges and imply a smooth blending between the two animation clips. But those controllers are meant for animation content only.

So we ended up with a mix of the two concepts. Our timelines contain independent sequences in which custom clip types trigger transitions within associated AnimationControllers. And the progression through the various timelines is triggered by a main experience control component.
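The combination can be illustrated with a small Python sketch. This is a simplified model under our own assumptions, not Unity's Timeline or animation API: `AnimationGraph`, `TriggerClip` and `Timeline` are hypothetical names, and blending between clips is left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


class AnimationGraph:
    """Directed graph of animation states; edges are the allowed transitions."""

    def __init__(self, start: str, edges: Dict[Tuple[str, str], str]) -> None:
        # edges maps (current_state, trigger) -> next_state
        self.state = start
        self.edges = edges

    def fire(self, trigger: str) -> bool:
        """Follow the edge for this trigger, if one leaves the current state."""
        next_state = self.edges.get((self.state, trigger))
        if next_state is None:
            return False  # no such transition from the current state
        self.state = next_state  # a real engine would blend between the clips here
        return True


@dataclass
class TriggerClip:
    """Custom timeline clip that fires a trigger on a graph at a given time."""
    time: float
    graph: AnimationGraph
    trigger: str


@dataclass
class Timeline:
    clips: List[TriggerClip]
    position: float = 0.0

    def advance(self, dt: float) -> None:
        """Move the playhead forward and fire every clip it passes over."""
        start, end = self.position, self.position + dt
        for clip in sorted(self.clips, key=lambda c: c.time):
            if start <= clip.time < end:
                clip.graph.fire(clip.trigger)
        self.position = end
```

The timeline only knows when something should happen, while the graph decides whether the transition is valid from the character's current state. A main control component would then choose which timeline to play next.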

In total, as of the last count, Pilot 3 has 18 animation controllers, 62 animation clips, 22 timelines and 31 synchronized triggers to drive a multiplayer experience with 4 characters in 3 rooms of the apartment over 4 scenes for a duration of about 10 minutes.

In terms of interaction we have integrated a number of different types. The first is grasp and touch. Driven by controller input (for example the Touch controllers of your Oculus headset), users have the ability to grab and touch objects. Grabbable objects can be held in your hand, while touchable objects such as a button can respond to a finger press. Some elements of progression depend on such input: the experience won’t progress if users don’t trigger the right events as they were instructed to do by the characters. And to make sure users are aware of what is expected from them, the characters will remind them with instructions of increasing urgency.
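The gating with escalating reminders could look roughly like the Python sketch below. The class name `ReminderGate`, the fixed reminder interval and the behaviour of repeating the last line once all reminders have been used are assumptions made for illustration, not a description of our implementation.

```python
from typing import List, Optional


class ReminderGate:
    """Holds back progression until the expected interaction occurs."""

    def __init__(self, reminders: List[str], interval: float) -> None:
        self.reminders = reminders  # ordered from gentle to urgent, must not be empty
        self.interval = interval    # seconds of inaction before the next reminder
        self.elapsed = 0.0
        self.level = 0
        self.done = False

    def notify_interaction(self) -> None:
        """Call when the user grabs or touches the expected object."""
        self.done = True

    def update(self, dt: float) -> Optional[str]:
        """Return a reminder line when one is due, otherwise None."""
        if self.done:
            return None
        self.elapsed += dt
        if self.elapsed < self.interval:
            return None
        self.elapsed = 0.0
        # Assumption: once the list is exhausted, keep repeating the most urgent line.
        line = self.reminders[min(self.level, len(self.reminders) - 1)]
        self.level += 1
        return line
```

The experience control component would call `update` every frame and only move on once `done` is set.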

Further, at various stages throughout the experience, the characters will address the users with a yes or no question. By integrating keyword speech recognition in the experience, users can simply answer the appropriate “yes” or “no” out loud, which when recognized determines the response of the character and the animation sequence which follows.
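As a rough illustration of the branching step, the Python sketch below assumes the speech recognizer hands us a plain text transcript. The function names and the sequence names in `branches` are hypothetical, and the recognizer itself is out of scope.

```python
import re
from typing import Dict, Optional


def match_answer(transcript: str) -> Optional[str]:
    """Return "yes" or "no" if exactly one of the keywords was spoken."""
    words = re.findall(r"[a-z']+", transcript.lower())
    said_yes = "yes" in words
    said_no = "no" in words
    if said_yes == said_no:
        return None  # neither keyword heard, or both: treat as unrecognized
    return "yes" if said_yes else "no"


def next_sequence(transcript: str, branches: Dict[str, str]) -> Optional[str]:
    """Pick the animation sequence that follows the user's answer, if any."""
    answer = match_answer(transcript)
    return branches.get(answer) if answer is not None else None
```

Matching on whole words avoids false positives such as "know" being taken for "no". An unrecognized answer returns `None`, so the character can simply ask again.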

The previous two examples are very explicit interactions: a user is instructed or asked to take an action, and when they complete it this has an effect. We have however also integrated more subtle interactions such as gaze redirection. During moments of the experience where the script indicates our characters address the users, a gaze redirection module is activated. This module overrides the pre-recorded mocap data and ensures that the characters look directly at the most relevant user. This can be a user in close proximity to a specified target, or the user nearest to our character. The redirection is performed within natural articulation limits and is subtle enough to go unnoticed by the user, but it adds a natural mode of engagement and a sense of being there.
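The two decisions involved, who to look at and how far the head may turn, can be sketched in Python as follows. This is a simplified 2D version on the floor plane. The function names, the 1 metre radius and the 70 degree yaw limit are assumptions chosen for illustration, and smoothing over time is omitted.

```python
import math
from typing import Dict, Optional, Tuple

Vec2 = Tuple[float, float]  # position on the floor plane as (x, z)


def pick_gaze_target(users: Dict[str, Vec2], character: Vec2,
                     point_of_interest: Optional[Vec2] = None,
                     radius: float = 1.0) -> Optional[str]:
    """Prefer the user closest to a point of interest, else the one nearest the character."""
    if not users:
        return None
    if point_of_interest is not None:
        near = {uid: math.dist(pos, point_of_interest) for uid, pos in users.items()
                if math.dist(pos, point_of_interest) <= radius}
        if near:
            return min(near, key=near.get)
    return min(users, key=lambda uid: math.dist(users[uid], character))


def clamped_head_yaw(character: Vec2, facing_deg: float, target: Vec2,
                     limit_deg: float = 70.0) -> float:
    """Head yaw in degrees relative to the body, clamped to an articulation limit."""
    dx, dz = target[0] - character[0], target[1] - character[1]
    desired = math.degrees(math.atan2(dx, dz))  # 0 degrees looks along +z
    offset = (desired - facing_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    return max(-limit_deg, min(limit_deg, offset))
```

Clamping the offset rather than the absolute angle keeps the head within range relative to the mocap-driven body, which is what keeps the correction from looking unnatural.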

Experiencing Pilot 3

With the Pilot 3 experience in place, from a production point of view we can say that the VRTogether platform has held up well and proved to be capable and flexible enough to support the more stringent requirements of an online multiplayer interactive VR experience.

From here on out several partners within the consortium plan to use pilot 3 to run a variety of user studies. And in the meantime we keep our fingers crossed for the global pandemic to clear up to a point where we can proudly show off what we have produced, and have you experience the final chapter in the mystery surrounding Elena Armova’s untimely departure.

Author: Bart Kevelham – Artanim

Come and follow us in this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, TheMo and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.