MPEG Meeting #126: Virtual Reality has the wind in its sails

VR-Together is an ambitious project that aims to push the boundaries of Social VR. This requires its partners to stay up to date with, and take part in, research and standardization activities.

VR-Together project partner Motion Spell attended the latest MPEG meeting (#126) in Geneva. While following the VR and Immersive Media activities, Motion Spell highlighted several video standardization developments that are relevant to the VR-Together project: MPEG-H, MPEG-I VVC, MPEG-5, and MPEG-I Immersive Video.

Video

Activity in the video coding area is intense, as MPEG is currently developing specifications for three standards: MPEG-H, MPEG-I VVC and MPEG-5.

- MPEG-H: In Part 2 – High Efficiency Video Coding, the 4th edition specifies a new HEVC profile that enables the encoding of single color plane video, with some restrictions on bits per sample, and that includes additional Supplemental Enhancement Information (SEI) messages.

- MPEG-I: In Part 3 – Versatile Video Coding (VVC), jointly developed with VCEG, MPEG is working on the successor to HEVC. This new standard, which appears to deliver about 50% better perceptual compression than HEVC, provides a high-level syntax that allows, for example, access to sub-parts of a picture (needed for future applications with scalability) and gradual decoding refresh (GDR), which is widely used for game streaming. Many other functionalities will also be provided, such as coded picture regions, header information, parameter sets, access mechanisms, reference picture signaling, buffer management, capability signaling and sub-profile signaling. A Committee Draft (CD) is expected in July 2019, and VVC standardization could reach the FDIS stage in July 2020 for the core compression engine.

- MPEG-5: this set of technologies is still being heavily discussed, but MPEG has already obtained, through its Calls for Proposals (CfP), all the technologies necessary to develop standards with the intended functionalities and performance.

- Part 1 – Essential Video Coding (EVC) will specify a video codec with two layers: layer 1 significantly improves on AVC but performs significantly worse than HEVC, and layer 2 significantly improves on HEVC but performs significantly worse than VVC. A Working Draft (WD) was produced during the meeting. An initial comparison between the AV1 and EVC reference software has started: EVC appears to be somewhat faster at the same quality.

- Part 2 – Low Complexity Video Coding Enhancements (mainly pushed by V-Nova, with improvements from Divideon) will specify a data stream structure made of two component streams: stream 1 is decodable by a hardware decoder, and stream 2 can be decoded in software with sustainable power consumption. Stream 2 provides new features, such as extending the compression capability of existing codecs and lowering encoding and decoding complexity, for on-demand and live streaming applications. This new Low Complexity Enhancement Video Coding (LCEVC) standard aims to bridge the gap between two successive generations of codecs by providing a codec-agile extension to existing video codecs that improves coding efficiency and can be readily deployed via a software upgrade, with sustainable power consumption. The planned timeline foresees a CD in October 2019 and an FDIS a year later.

Since mid-2017, MPEG has been working on MPEG-I (Coded Representation of Immersive Media), which targets future immersive applications. The goal of this new standard is to enable various forms of audio-visual immersion, including panoramic video with 2D and 3D audio, with various degrees of true 3D visual perception (moving toward six degrees of freedom). At this meeting, MPEG evaluated the responses to its Call for Proposals and started a new project on Metadata for Immersive Video, linked with the Three Degrees of Freedom Plus (3DoF+) feature. This work may be very relevant for the VR-Together project to follow.

Support for 360-degree video, also called omnidirectional video, has been standardized in MPEG-I Part 2: Omnidirectional Media Format (OMAF; ISO/IEC 23090-2) and in Supplemental Enhancement Information (SEI) messages for High Efficiency Video Coding (HEVC; ISO/IEC 23008-2). These standards can be used to deliver immersive visual content. However, because flat 360-degree video offers no motion parallax, it may cause visual discomfort when objects close to the viewer are rendered. The interactive parallax feature of Three Degrees of Freedom Plus (3DoF+) will provide viewers with visual content that more closely mimics natural vision, but within a limited range of viewer motion. A typical 3DoF+ use case is a user sitting on a chair (or in a similar position), watching stereoscopic omnidirectional virtual reality (VR) content on a head-mounted display (HMD), with the ability to move her head in any direction.

At its 126th meeting, MPEG received five responses to the Call for Proposals (CfP) on 3DoF+ Visual. Subjective evaluations showed that adding interactive motion parallax to 360-degree video is feasible. Based on the subjective and objective evaluations, a new project was launched under the name Metadata for Immersive Video. A first version of a Working Draft (WD) and a corresponding Test Model (TM) were designed to combine technical aspects from multiple responses to the call. The current schedule for the project anticipates a Final Draft International Standard (FDIS) of ISO/IEC 23090-7 Immersive Metadata in July 2020.

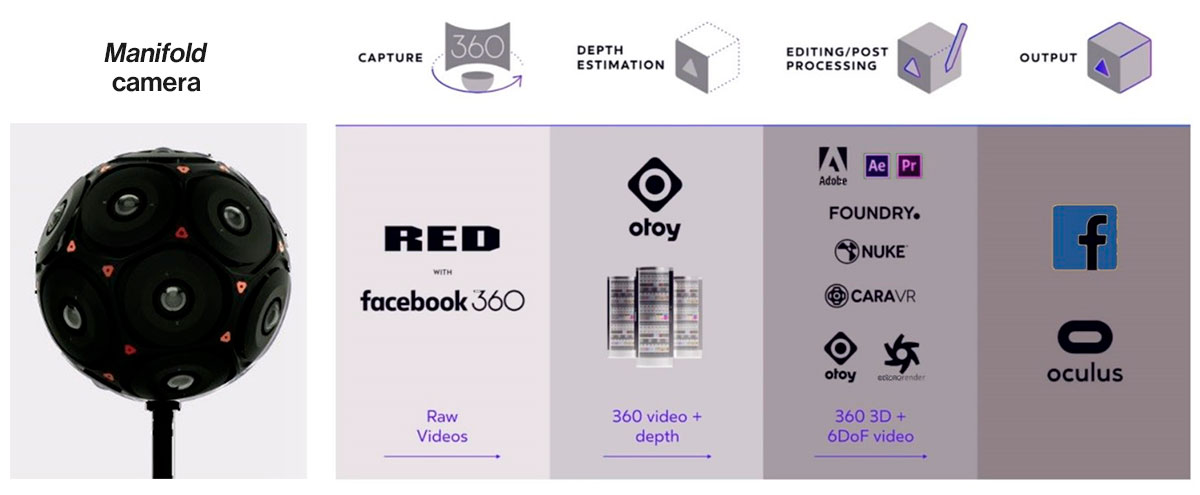

Besides the MPEG 3DoF+ Visual standardization process, it is worth mentioning the collaboration between Facebook and RED Digital Cinema, started last year, which led to the first studio-ready camera system for immersive 3DoF+ storytelling: the Manifold camera, an end-to-end solution for 3D and 360 video capture. Manifold is a single product that redefines immersive cinematography with an all-in-one capture and distribution framework, giving creative professionals complete ownership of their 3D video projects, from conception to curtain call. For audiences, this means total narrative immersion in anything shot on the new camera system and viewed through 3DoF VR headsets. The schema below shows the processing pipeline put in place for this first studio-ready camera system for immersive 3DoF+ storytelling:

Next MPEG meeting

The next meeting (127th) will be held on July 8-12, 2019 in Gothenburg, Sweden.

Come and follow us on this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, Entropy Studio and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.