A new pilot to push the boundaries of social VR

The VR-Together project is ready to take big steps forward in the year ahead. After a first pilot that demonstrated the main features of the project, we are now working on a second trial that moves further towards the objectives of VR-Together. Pilot 2 aims to improve the social virtual reality experience by focusing on three key goals:

- Creating the feeling of being there together by improving the feeling of immersion in the content and the feeling of togetherness;

- Adding a live factor to the content by generating and transmitting the scene content live;

- Increasing the number of users supported by the VR-Together system, from 2 to 4 or more users.

This post goes through the different challenges and points out the elements introduced in VR-Together’s Pilot 2.

Content Scenario

How a virtual environment is created depends directly on the content to be shown and on the purpose of its visualization. In this case, we are aiming at a live entertainment consumption scenario, where the end user is not expected to interact with the content (passive viewing) but is expected to interact with other users. This tries to simulate what a large share of today's TV audience does daily, that is, watching TV together.

To continue the story started in Pilot 1, the content scenario places end users in a TV studio, more specifically a news studio, where the news presenter gives an overview of the news of the day. At some point, in order to go into depth on one of the news items, the end users will be holo-ported to a crime scene, or to a place directly related to that crime, where a journalist will describe the scene. The objective of this transition between sites is twofold: first, to immerse a group of users in an indoor environment, and second, in an outdoor environment.

Options to represent the virtual environment

To meet the objectives of Pilot 2, several options that mix heterogeneous media formats are under consideration. They are listed below.

Option 1 – Full 360

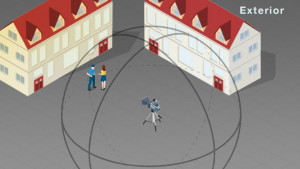

What the end user sees is basically a 360 video, and user representations are either projected or visualized by means of side-by-side insertion. One option is to mix two half spheres at production, so that each end user sees presenter and journalist in the same scene, both captured with their backgrounds by omnidirectional cameras. Alternatively, there could be a transition between the TV news set and the outdoor space.

Fig 1. Shooting is done with two 360 cameras, indoors in a TV studio (left image) and outdoors where the action of the story has happened (right image).

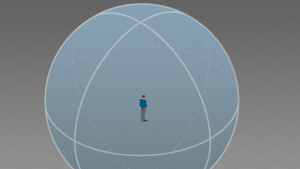

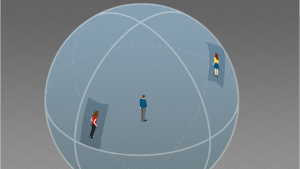

Fig 2. In a 360 video, each user is placed in the middle of the sphere (left image). This introduces several issues: views among users won’t be coherent, only 3DOF is available, and inserting other user representations may be very problematic. Merging side-by-side video streams with other user representations may be an option (right image), although projection of TVM or point cloud (PC) based representations can also be considered.

In this first option – Full 360 – the production side of the content is “simple”, and we assume that both presenter and journalist are part of the shooting, that is, their visual representation is an intrinsic part of the 360 video(s). Very likely, the complexity of this configuration lies more in how end users are presented and represented, which leads to three main suboptions:

- Option 1.1 User representations are side-by-side video inserts, which requires chroma keying or, alternatively, background removal;

- Option 1.2 User representations are TVM or PC projections;

- Option 1.3 User representations are TVM or PC 3D insertions.

Following the same line of argument, we could also explore how self-representation works in 360 environments, with the same three options for self-representation.

Option 2 – Full 3D

What the end user sees is basically a 3D environment, representing both scene and users with a mixture of formats, but anchoring the experience into a 3D scene (a TV set or an outdoors location), CGI-based and made of 3D objects.

Fig 3. A capture of what is currently being done by a weather channel. The environment is full 3D, while the character, a presenter on a chroma in this case, is a 2D video insertion. This image shows what VR-Together is aiming for, but in a 3DOF+ environment, where a fixed camera position and pre-rendering farms are not applicable. In a framed TV context, by contrast, camera position and movements can be defined in advance and executed while the presenter is acting live.

As in Pilot 1, end users can be represented using the different formats envisaged by the project (RGBD video, PCs or TVMs). Under this full 3D option, the major differences, and the choices to be made, arise when we try to figure out how a live action is going to be captured, transmitted and displayed, since rendering 3D user representations in a 3D environment is not an issue (well, it is, but a technical one, not a production or user experience one). The consortium is considering three possibilities: billboard representations, mono or stereo, as in Figure 3; pre-rigged characters animated remotely and live with a MoCap system; or the same capture systems we have in place for end users, applied in this case to the characters acting in the scene. In particular:

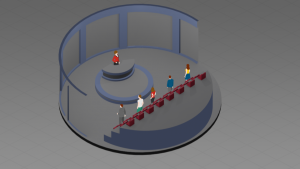

- Option 2.1 – Full 3D with billboard character representations. As already tested in Pilot 1, and as a natural continuation of the technology implemented in the previous stage of the project, the most comfortable and technically feasible configuration is to build two full 3D environments, a TV set and an outdoor space, where presenter and journalist are placed as video billboards (mono or stereo) that can be captured, transmitted and inserted live in both 3D environments.

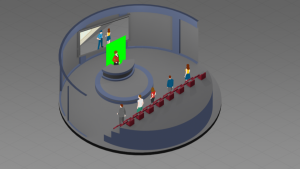

Fig 4. Recreation of a video insertion of characters on chroma into a 3D environment.

- Option 2.2 – Full 3D with pre-rigged character representations. The difference from option 2.1 is how the acting characters are represented. In this case, instead of video billboards, high-quality 3D pre-rigged characters would be used, animated live with a motion capture system. The actor wears a motion capture suit, the movements are captured and a skeleton is reconstructed. This skeleton data is then streamed to all interested components and used to drive the character’s animation on the receiving end. A dedicated motion capture studio with Vicon cameras and software is available and can be used for the motion capture (as was the case for the characters of Pilot 1), but a more flexible alternative in the form of an Xsens MVN setup is also available. The latter, based on inertial sensor technology, is easier to transport, particularly when demonstrating the full solution at fairs or conferences.

- Option 2.3 – Full 3D with TVM or point cloud-based character representations. This option uses the 4D capture systems being developed in the project to capture presenter and journalist in real time, so that they are integrated in the 3D environments like any other end user.

Fig 5. Recreation of 3D characters (pre-rigged and animated with MoCap, or TVM or PC based) inserted into a 3D environment.
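To make the skeleton streaming of option 2.2 more concrete, here is a minimal sketch of how one frame of skeleton data could be packed on the capture side and unpacked on the receiving end. Everything in it is an assumption made for illustration: the `Joint` structure, the `encode_frame` and `decode_frame` helpers and the JSON layout are hypothetical, and none of it reflects the actual Vicon, Xsens or VR-Together formats.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Joint:
    """One joint of a captured skeleton (hypothetical layout)."""
    name: str
    position: tuple  # (x, y, z), assumed to be in metres
    rotation: tuple  # quaternion (x, y, z, w)


def encode_frame(timestamp_ms, joints):
    """Serialize one skeleton frame to bytes for transmission."""
    payload = {"t": timestamp_ms, "joints": [asdict(j) for j in joints]}
    return json.dumps(payload).encode("utf-8")


def decode_frame(data):
    """Rebuild the timestamp and the joints on the receiving end."""
    payload = json.loads(data.decode("utf-8"))
    joints = [
        Joint(j["name"], tuple(j["position"]), tuple(j["rotation"]))
        for j in payload["joints"]
    ]
    return payload["t"], joints


# Illustrative frame with a single joint; the values are made up.
frame = encode_frame(40, [Joint("hips", (0.0, 0.95, 0.0), (0.0, 0.0, 0.0, 1.0))])
timestamp, joints = decode_frame(frame)
print(timestamp, joints[0].name, joints[0].position)
```

The receiver would apply each joint's rotation to the matching bone of the pre-rigged character. A real system would more likely use a compact binary format than JSON, which is chosen here only for readability.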

Option 3 – Mixing 3D and 180 (Oculus Venues style)

This option closely replicates what Oculus Venues is already doing: mixing a 3D space, where users are placed and can interact, with 180 content, and adding a transition at the boundary between the two content formats. As an added value over what Oculus Venues already offers, VR-Together would use photo-realistic end-user representations.

Fig 6. A football match as seen in Oculus Venues (left) and a recreation of the VR-Together proposal, with more realistic characters that would basically be TVMs or PCs.

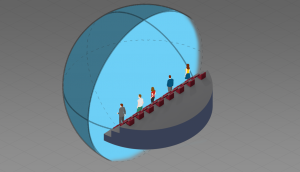

Fig 7. The figure depicts a half sphere (180 degrees), with the center of the sphere close to the user’s position. Notice how the half sphere fades out at its edges, so that there is a transition between the 3D environment where the users are placed and the video “screen/dome” where users visualize the content. Although 180 (or 360) video is 3DOF, a parallax effect can be created if 3D objects are positioned between the user’s eyes and the video dome. Thus, 3D objects representing other users, chairs, and maybe the presenter or the journalist can give the feeling of being in a 3DOF+ environment.

Option 4 – Mixing wide angle video window and 3D

One of the main open questions we have regarding option 3(.1) is that 180 content per se will never behave as it would in a 3DOF+ or 6DOF environment. To soften the lack of parallax when visualizing such content, 3D objects can be inserted between the end user positions and the video content being displayed, but with the 180 dome so close, our hypothesis is that this will affect the feeling of immersion. The variation proposed as option 4 (or 3.2) is to consider directive videos that depict live actions happening (and positioned) sufficiently far from the user’s eyes, and that can be inserted in a framed context or behind a fictional frame.

Let’s consider a theater as an example. We can shoot an opera from seat 20, for instance, and crop and insert the resulting video on the stage of a virtual theater, right behind the curtains. Will the VR users, sitting far enough from the stage, feel the difference? Is this better or worse than having a 180 video of the stage and part of the seating area, which will later be replaced by a 3D seating area? Since we are also considering an outdoor space for our fiction, let’s now consider the same for an action happening at the end of a tunnel, or a street, or anything that allows 3D artists to seamlessly integrate both contents (a 3D scene and a directive video).

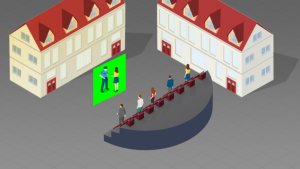

Fig 8. The figure tries to depict a directive video projected on a flat object behind the buildings. A parallax effect is created by the buildings and street lights while the video represents an action happening in the next crossing of the street.
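The reasoning behind placing the video far from the viewer can be illustrated with a little geometry. The sketch below estimates how much a point straight ahead would appear to shift when the viewer moves their head sideways. A flat video shows no such shift, so the smaller the expected shift, the less its absence should be noticed. It assumes a purely sideways head movement, and the 10 cm offset and the distances are illustrative values, not project measurements.

```python
import math


def parallax_shift_deg(head_offset_m, distance_m):
    """Apparent angular shift, in degrees, of a point straight ahead at
    distance_m when the viewer's head moves sideways by head_offset_m."""
    return math.degrees(math.atan2(head_offset_m, distance_m))


head_offset = 0.10  # assumed sideways head movement, in metres

# Hypothetical scene elements and their distances from the viewer, in metres.
scene = [
    ("chair of a nearby user", 1.5),
    ("street light", 8.0),
    ("video plane at the next crossing", 40.0),
]

for label, distance in scene:
    shift = parallax_shift_deg(head_offset, distance)
    print(f"{label}: {shift:.2f} degrees")
```

Under these assumptions, nearby 3D objects shift by a few degrees, while content placed tens of metres away would shift by only a fraction of a degree. This is consistent with the hypothesis that a distant directive video needs less help from parallax than a close 180 dome.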

The consortium is still discussing the pros and cons of each option from the production, implementation and user experience perspectives. Stay tuned for some exciting updates coming your way in the coming weeks!

Come and follow us in this VR journey with i2CAT, CWI, TNO, CERTH, Artanim, Viaccess-Orca, Entropy Studio and Motion Spell.

This project has been funded by the European Commission as part of the H2020 program, under the grant agreement 762111.